- Introduction

- Thread Class vs Runnable Interface

- Creating a Thread

- Creating Multiple Threads

- Using isAlive() and join()

- Thread Priorities

- Thread Class Methods

- Synchronization

- Inter-Thread Communication

- Deadlock

- Suspending, Resuming, and Stopping Threads

- Understanding the process

- Thread’s State

- Daemon Thread

- Common Tasks - FAQ Code

- Java Runtime

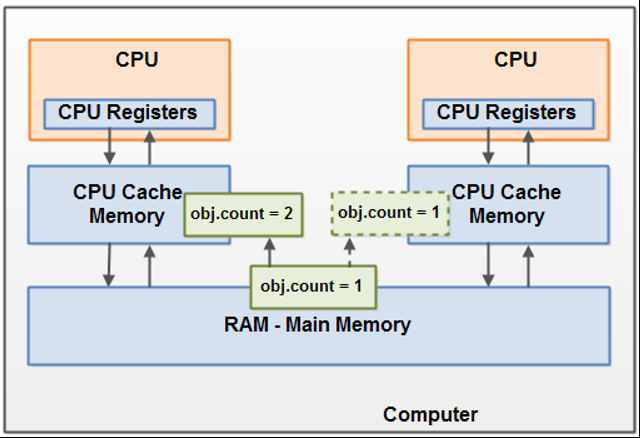

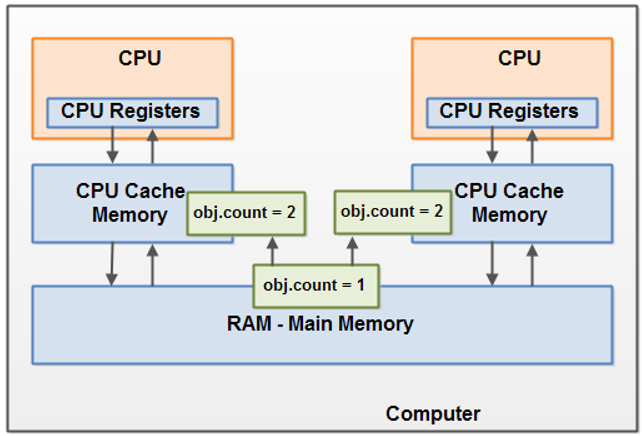

- Java Concurrency

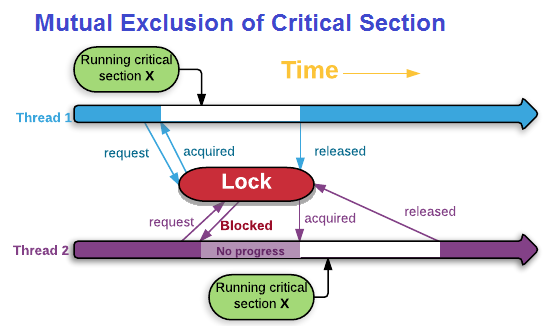

- Using locks solves the problem. However, performance takes a hit.

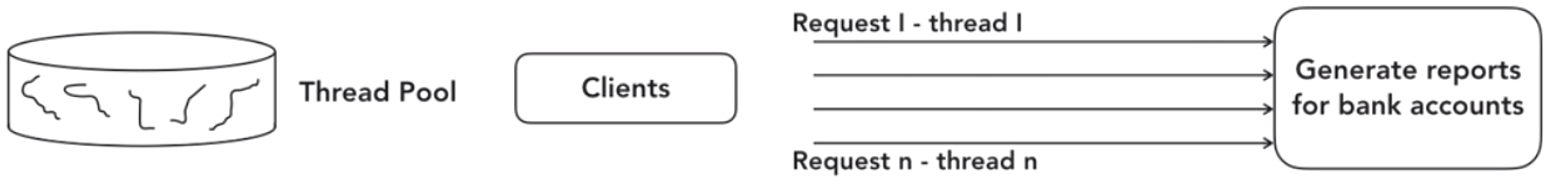

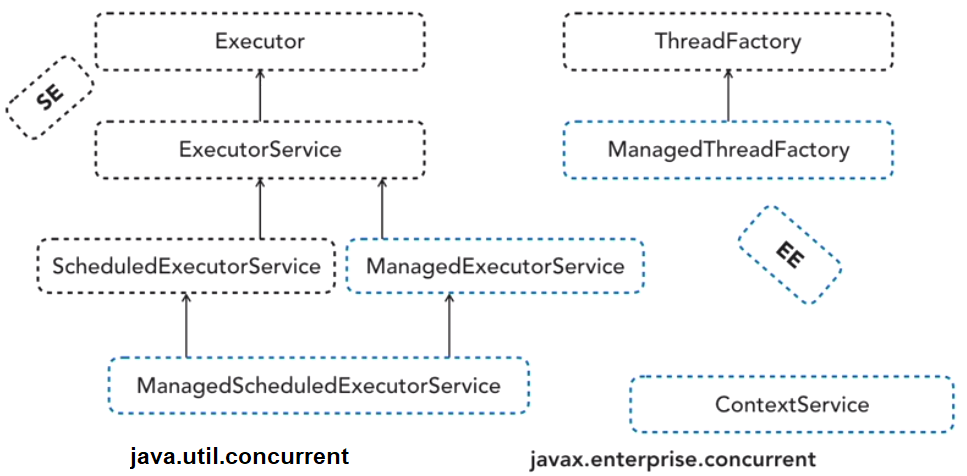

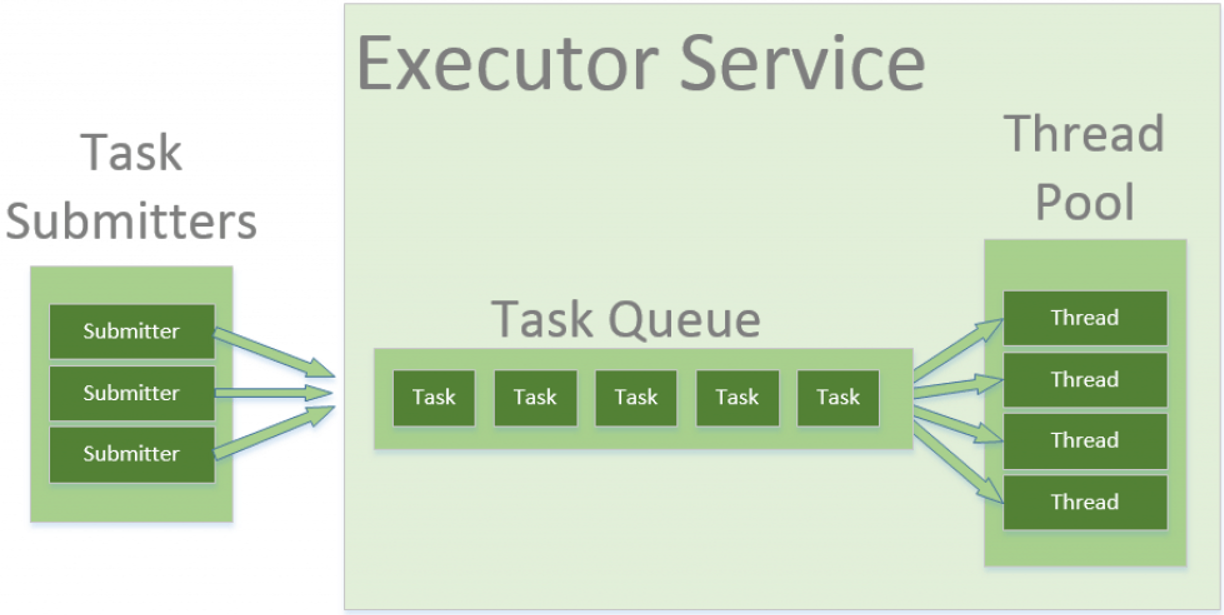

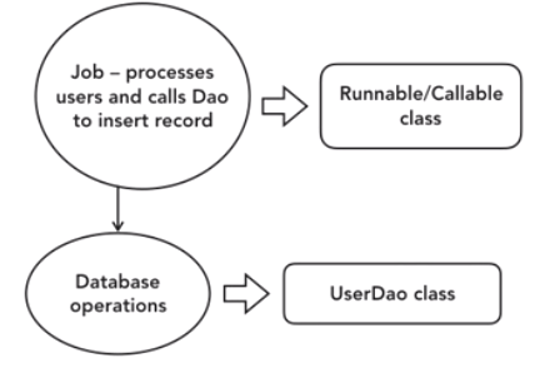

- Executor API

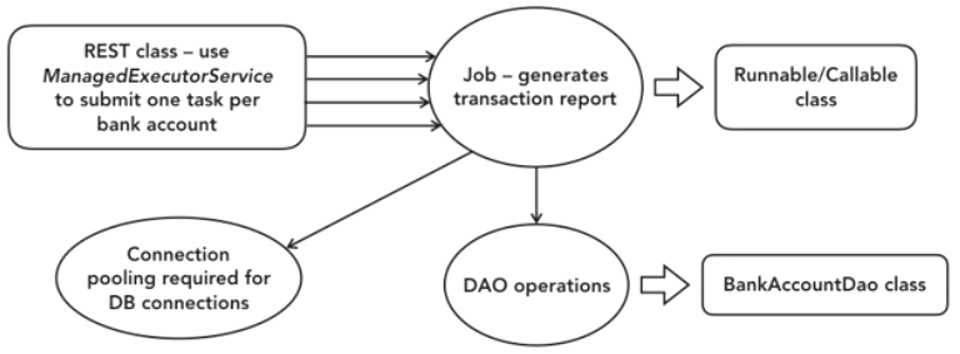

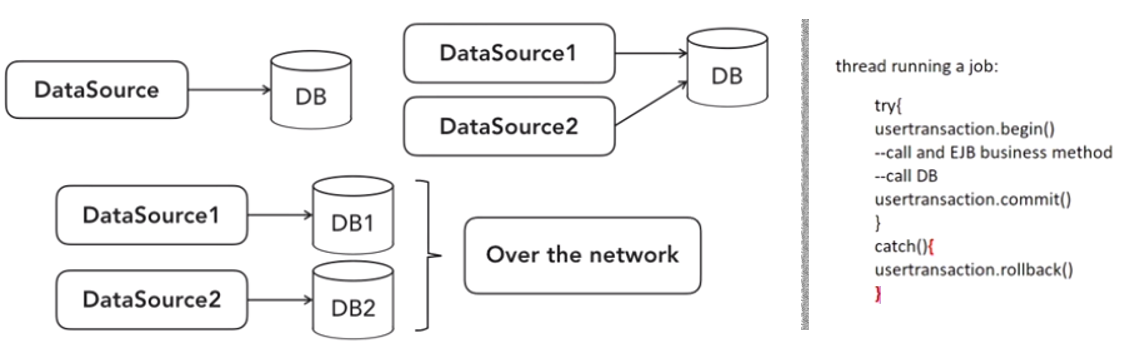

- Java EE Concurrency API

- Multithreading FAQ

- How can we achieve thread safety in Java?

- What is Java Thread Dump, How can we get Java Thread dump of a Program?

- What is Deadlock? How to analyse and avoid deadlock situation?

- What is Java Timer Class? How to schedule a task to run after specific interval?

- What is Lock interface in Java Concurrency API? What are its benefits over synchronization?

- What is BlockingQueue? How can we implement Producer-Consumer problem using Blocking Queue?

- What is FutureTask Class?

- What are Concurrent Collection Classes?

- What is Executors Class?

- What are some of the improvements in Concurrency API in Java 8?

- Differences between concurrency vs. parallelism?

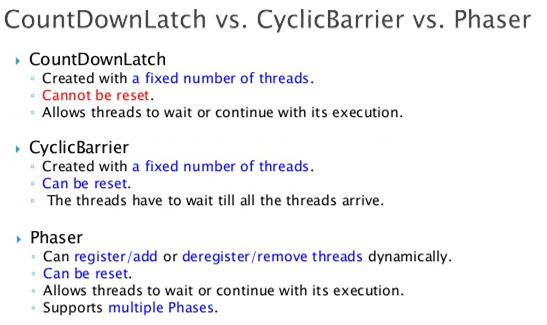

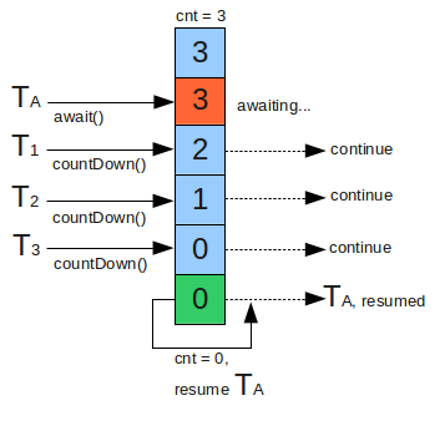

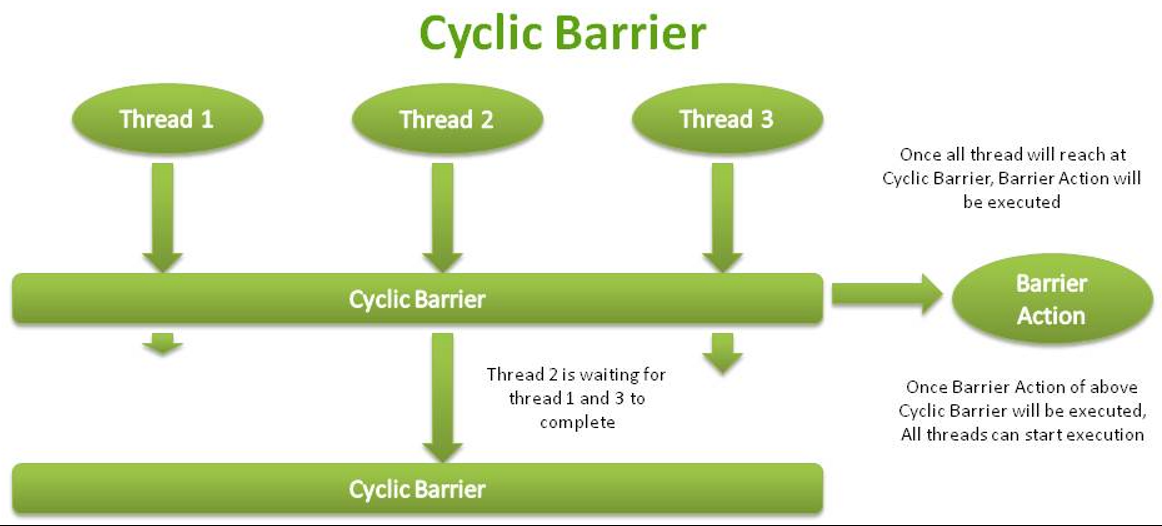

- CountDownLatch and CyclicBarrier and Phaser

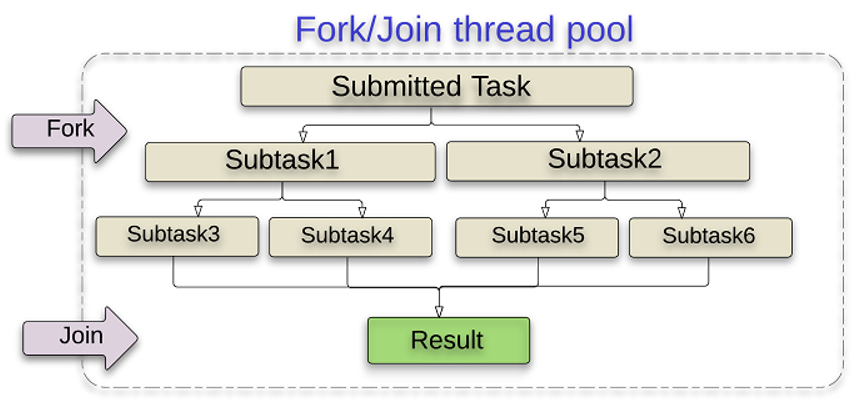

- Fork Join Framework

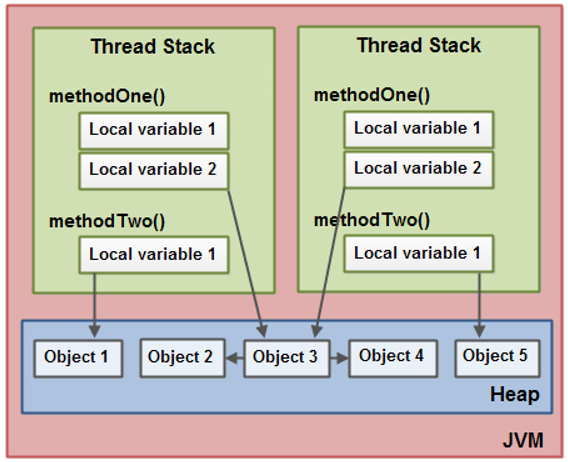

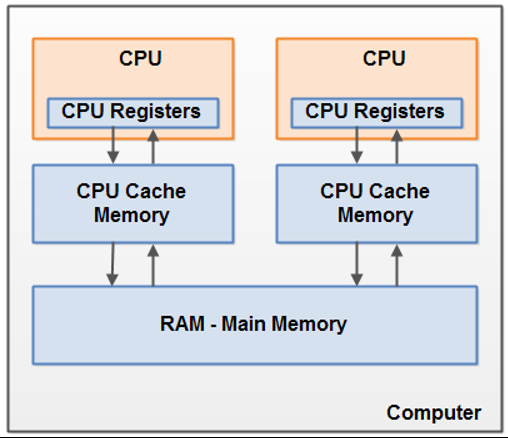

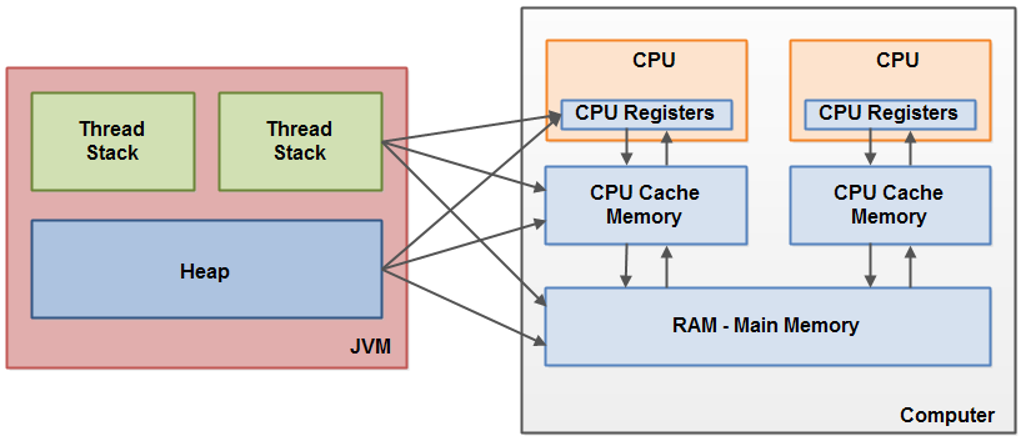

- Java Memory Model

- TODO

Introduction

- Process

- heavyweight tasks that require their own separate address spaces.

- Interprocess communication and Context switching are costly.

- Thread

- Lightweight tasks that share the same address space in a process.

- Interthread communication and context switching are inexpensive compared with processes.

- Multiprogramming – A computer running more than one program at a time (like running Excel and Firefox simultaneously).

- Multiprocessing – A computer using more than one CPU at a time.

- Multitasking – Tasks sharing a common resource (like 1 CPU).

- Multithreading is an extension of multitasking. Thread is used to achieve multitasking.

| Process-based multitasking | Thread-based multitasking |
|---|---|
| Allows your computer to run two or more programs concurrently. | Allows a single program to perform two or more tasks simultaneously. |
| A program is the smallest unit of code that can be dispatched by the scheduler. | A thread is the smallest unit of dispatchable code. |
| Deals with the “big picture”. | Handles the details. |
| | Ex. a text editor can format text at the same time as it makes word suggestions. |

- Multithreading reduces idle CPU time because another thread can run while one is waiting.

Java Thread Model

- Threads enable the entire environment to be asynchronous, i.e. they increase efficiency by using idle CPU cycles.

- Benefit - loop/polling mechanism is eliminated.

- One thread can pause without stopping other parts of your program.

- Idle time - when a thread reads data from network or waits for user input.

Single vs Multi Core

- On a single-core system, concurrently executing threads share the CPU, with each thread receiving a slice of CPU time.

- Two or more threads do not actually run at the same time, but idle CPU time is utilized.

- On multi-core systems, two or more threads can actually execute simultaneously.

Thread Class vs Runnable Interface

- Thread encapsulates a thread of execution. Since you can’t directly refer to the ethereal state of a running thread, you will deal with it through its proxy, the Thread instance that spawned it.

Main Thread

- On program start-up, the main thread begins running immediately; it is the thread that executes main( ).

- The main thread is important for two reasons:

- other “child” threads will be spawned from it.

- it often performs various shutdown actions, so it is frequently the last thread to finish execution.

- The main thread can be controlled through its Thread object.

Thread t = Thread.currentThread();

static void sleep(long milliseconds, int nanoseconds) throws InterruptedException

final void setName(String threadName)

final String getName( )

- sleep( ) might throw an InterruptedException if some other thread interrupts this sleeping one. The main thread's default name is main, its priority is 5, and its thread-group name is main.
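The calls above can be combined into a small sketch of controlling the main thread through its Thread object. The class name `CurrentThreadDemo` and the helper `rename` are illustrative names, not part of the original notes.

```java
class CurrentThreadDemo {
    // Renames the calling thread and returns the name it reports afterwards.
    static String rename(String newName) {
        Thread t = Thread.currentThread(); // the thread running this code
        t.setName(newName);
        return t.getName();
    }

    public static void main(String[] args) {
        Thread t = Thread.currentThread();
        System.out.println("Before: " + t.getName() + ", priority " + t.getPriority());
        System.out.println("After: " + rename("My Thread"));
        try {
            Thread.sleep(100); // pause the main thread for 100 ms
        } catch (InterruptedException e) {
            System.out.println("Main thread interrupted");
        }
    }
}
```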

| Method | Meaning |
|---|---|
| getName | Obtain a thread’s name. |
| getPriority | Obtain a thread’s priority. |
| isAlive | Determine if a thread is still running. |
| join | Wait for a thread to terminate. |
| run | Entry point for the thread. |
| sleep | Suspend a thread for a period of time. |
| start | Start a thread by calling its run method. |

- A thread group is a data structure that controls the state of a collection of threads as a whole.

Creating a Thread

Implementing Runnable

Thread()

Thread(String name)

Thread(Runnable r)

Thread(Runnable threadOb, String threadName)

class NewThread implements Runnable {

Thread t;

NewThread() {

t = new Thread(this, "Demo Thread");

System.out.println("Child thread: " + t);

t.start();

}

// run method implemented as below

}

public void run() {

try {

for (int i = 5; i > 0; i--) {

System.out.println("Child Thread: " + i);

Thread.sleep(500);

}

} catch (InterruptedException e) {

System.out.println("Child interrupted.");

}

System.out.println("Exiting child thread.");

}

Extending Thread

class NewThread extends Thread {

NewThread() {

super("Demo Thread");

System.out.println("Child thread: " + this);

start();

}

// run method implemented as above

}

- The extending class must override run( ), which is the entry point for the new thread.

- It must also call start( ) to begin execution of the new thread.

- start( ) creates the new thread of execution, and the JVM then calls run( ) on that thread.

class ExtendThread {

public static void main(String args[]) {

Thread t = new NewThread();

try {

for (int i = 5; i > 0; i--) {

System.out.println("Main Thread: " + i);

Thread.sleep(1000);

}

} catch (InterruptedException e) {

System.out.println("Main thread interrupted.");

}

System.out.println("Main thread exiting.");

}

}

Choosing an Approach

- The Thread class should be extended only when it is being enhanced or modified in some way. If you are not overriding any of Thread’s other methods, implement Runnable.

- By implementing Runnable, your thread class is free to inherit a different class.
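A minimal sketch of the second point: because the task implements Runnable, it remains free to extend another class. `Task`, `PrintTask`, and `RunnableChoiceDemo` are hypothetical names used only for illustration.

```java
class Task {                       // hypothetical base class
    String label() {
        return "task";
    }
}

// Inherits from Task and is still runnable on a thread.
class PrintTask extends Task implements Runnable {
    boolean ran = false;

    @Override
    public void run() {
        System.out.println("Running " + label() + " on " + Thread.currentThread().getName());
        ran = true;
    }
}

class RunnableChoiceDemo {
    // Runs one PrintTask on its own thread and reports whether run() executed.
    static boolean runOnce() {
        PrintTask task = new PrintTask();
        Thread t = new Thread(task, "Worker");
        t.start();
        try {
            t.join(); // after join() returns, the write to task.ran is visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return task.ran;
    }

    public static void main(String[] args) {
        System.out.println("Task ran: " + runOnce());
    }
}
```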

Creating Multiple Threads

class NewThread implements Runnable {

String name;

Thread t;

NewThread(String threadname) {

name = threadname;

t = new Thread(this, name);

System.out.println("New thread: " + t);

t.start();

}

@Override

public void run() {

try {

for (int i = 3; i > 0; i--) {

System.out.println(name + ": " + i);

Thread.sleep(1000);

}

} catch (InterruptedException e) {

System.out.println(name + " interrupted.");

}

System.out.println(name + " exiting.");

}

}

class MultiThreadDemo {

public static void main(String args[]) {

new NewThread("One");

new NewThread("Two");

new NewThread("Three");

try {

Thread.sleep(10000);

} catch (InterruptedException e) {

System.out.println("Main thread Interrupted");

}

System.out.println("Main thread exiting.");

}

}

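- The sleep( ) in main keeps the main thread alive until the child threads have finished. On some older JVMs, if the main thread finished before a child thread had completed, the Java run-time system could “hang”. A modern JVM simply keeps running until every non-daemon thread has finished, and join( ) is the more reliable way to wait for child threads.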

Using isAlive() and join()

- Two ways exist to determine whether a thread has finished.

- isAlive() returns true if the thread on which it is called is still running. It is only occasionally useful.

- join() is used more commonly: the calling thread waits until the thread it joins with terminates.

final void join( ) throws InterruptedException

final public void join(long milliseconds) throws InterruptedException

class DemoJoin {

public static void main(String args[]) {

NewThread ob1 = new NewThread("One");

NewThread ob2 = new NewThread("Two");

NewThread ob3 = new NewThread("Three");

System.out.println("Thread One is alive: "+ ob1.t.isAlive());

System.out.println("Thread Two is alive: "+ ob2.t.isAlive());

System.out.println("Thread Three is alive: "+ ob3.t.isAlive());

try {

System.out.println("Waiting for threads to finish.");

ob1.t.join(); ob2.t.join(); ob3.t.join();

} catch (InterruptedException e) {

System.out.println("Main thread Interrupted");

}

System.out.println("Thread One is alive: "+ ob1.t.isAlive());

System.out.println("Thread Two is alive: "+ ob2.t.isAlive());

System.out.println("Thread Three is alive: "+ ob3.t.isAlive());

System.out.println("Main thread exiting.");

}

}

// Output

New thread: Thread[One,5,main]

New thread: Thread[Two,5,main]

New thread: Thread[Three,5,main]

Thread One is alive: true

Thread Two is alive: true

Thread Three is alive: true

Waiting for threads to finish.

One: 3

Two: 3

Three: 3

One: 2

Two: 2

Three: 2

One: 1

Two: 1

Three: 1

Two exiting.

Three exiting.

One exiting.

Thread One is alive: false

Thread Two is alive: false

Thread Three is alive: false

Main thread exiting
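The timed form, join(long milliseconds), returns either when the thread ends or when the timeout elapses, so isAlive() is the way to tell which happened. A minimal sketch, assuming a hypothetical helper `stillAliveAfterJoin` and illustrative timings:

```java
class JoinTimeoutDemo {
    // Starts a worker that sleeps for workMillis, waits for it for at most
    // waitMillis, and reports whether it was still alive when join() returned.
    static boolean stillAliveAfterJoin(long workMillis, long waitMillis) {
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(workMillis);
            } catch (InterruptedException e) {
                // interrupted by the caller below: just finish early
            }
        }, "Worker");
        worker.start();
        boolean alive = false;
        try {
            worker.join(waitMillis);  // returns when the worker ends or the timeout elapses
            alive = worker.isAlive(); // true means the timeout elapsed first
            worker.interrupt();       // wake the worker so the demo ends promptly
            worker.join();            // now wait without a timeout
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return alive;
    }

    public static void main(String[] args) {
        System.out.println("Slow worker still alive: " + stillAliveAfterJoin(2000, 50));
        System.out.println("Fast worker still alive: " + stillAliveAfterJoin(10, 2000));
    }
}
```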

Thread Priorities

- A thread’s priority is used to decide when to switch from one running thread to the next. This switch is called a context switch.

- The rules that determine when a context switch takes place are simple:

- A thread can voluntarily relinquish control

- explicitly yielding, sleeping, or blocking on pending I/O.

- Now, all other threads are examined, and the highest-priority thread that is ready to run is given the CPU.

- A thread can be preempted by a higher-priority thread

- Basically, as soon as a higher-priority thread wants to run, it does. This is called preemptive multitasking.

- When same priority threads are competing for CPU cycles, they are time-sliced automatically in round-robin fashion.

final void setPriority(int level)

final int getPriority( )

- Thread defines these static final constants: MIN_PRIORITY (1), MAX_PRIORITY (10), and NORM_PRIORITY (5).

- Relying on preemptive behaviour, instead of cooperatively giving up CPU time, causes most cross-platform inconsistencies.

- To obtain predictable cross-platform behaviour, use threads that voluntarily give up control of the CPU.
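A short sketch of setPriority( ) and getPriority( ) with the three constants. `PriorityDemo` and `priorities` are illustrative names. The priority is only a hint to the scheduler, so the sketch reads the values back rather than relying on any execution order.

```java
class PriorityDemo {
    // Returns { priority of "Low", NORM_PRIORITY, priority of "High" }.
    static int[] priorities() {
        Thread low = new Thread(() -> { }, "Low");
        Thread high = new Thread(() -> { }, "High");
        low.setPriority(Thread.MIN_PRIORITY);  // 1
        high.setPriority(Thread.MAX_PRIORITY); // 10
        return new int[] { low.getPriority(), Thread.NORM_PRIORITY, high.getPriority() };
    }

    public static void main(String[] args) {
        int[] p = priorities();
        System.out.println("low=" + p[0] + " norm=" + p[1] + " high=" + p[2]);
    }
}
```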

Thread Class Methods

Why Thread sleep() and yield() methods are static?

- Thread sleep() and yield() always act on the currently executing thread. They cannot be invoked on another thread, such as one in the wait state.

- They are static to make this explicit, so that programmers are not misled into thinking they can make some other, non-running thread sleep or yield.

| Method | Description |
|---|---|
| public void run() | is used to perform the action for a thread. |
| public void start() | starts the execution of the thread. The JVM calls the run() method on the thread. |
| public static void sleep(long milliseconds) | causes the currently executing thread to sleep (temporarily cease execution) for the specified number of milliseconds. |
| public void join() | waits for a thread to die. |
| public void join(long milliseconds) | waits at most the specified number of milliseconds for a thread to die. |
| public int getPriority() | returns the priority of the thread. |
| public void setPriority(int priority) | changes the priority of the thread. |
| public String getName() | returns the name of the thread. |
| public void setName(String name) | changes the name of the thread. |
| public static Thread currentThread() | returns a reference to the currently executing thread. |
| public long getId() | returns the id of the thread. |
| public Thread.State getState() | returns the state of the thread. |
| public boolean isAlive() | tests if the thread is alive. |
| public static void yield() | hints that the currently executing thread is willing to pause and allow other threads to execute. |
| public void suspend() | is used to suspend the thread (deprecated). |
| public void resume() | is used to resume the suspended thread (deprecated). |
| public void stop() | is used to stop the thread (deprecated). |
| public boolean isDaemon() | tests if the thread is a daemon thread. |
| public void setDaemon(boolean b) | marks the thread as a daemon or user thread. |
| public void interrupt() | interrupts the thread. |
| public boolean isInterrupted() | tests if the thread has been interrupted. |
| public static boolean interrupted() | tests if the current thread has been interrupted, and clears its interrupted status. |

Synchronization

- Synchronization - ensures that resource will be used by only one thread at a time.

- Monitor - an object used as a mutually exclusive lock.

- Every object has a lock associated with it. Only one thread can own a monitor at a given time.

- When a thread acquires a lock, it is said to have entered the monitor.

- All other threads trying to enter the locked monitor are suspended, i.e. they wait until the first thread exits the monitor.

- A thread that owns a monitor can re-enter the same monitor if it so desires.

- Java’s messaging system allows a thread to enter a synchronized method on an object, and then wait there until some other thread explicitly notifies it to come out.

Synchronized Methods

- To enter an object’s monitor, just call a method that has been modified with the synchronized keyword.

- While a thread is inside a synchronized method, all other threads that try to call it (or any other synchronized method) on the same instance have to wait.

- To exit the monitor and relinquish control of the object to the next waiting thread, the owner of the monitor simply returns from the synchronized method.

- If call( ) is not synchronized, nothing exists to stop three threads from calling the same method, on the same object, at the same time. This is known as a race condition, because the three threads are racing each other to complete the method.

- Any time that you have a method, or group of methods, that manipulates the internal state of an object in a multithreaded situation, you should use the synchronized keyword to guard the state from race conditions.

class Callme {

synchronized void call(String msg) { ...}

}

Output - [Hello][Synchronized][World]

- Once a thread enters any synchronized method on an instance, no other thread can enter any other synchronized method on the same instance. However, non-synchronized methods on that instance will continue to be callable.
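A minimal sketch of guarding shared state with synchronized methods. `Counter`, `SyncCounterDemo`, and `countWith` are hypothetical names. Without the synchronized keyword on increment( ), concurrent `count++` updates could be lost.

```java
class Counter {
    private int count = 0;

    // Only one thread at a time can be inside either method on the same Counter.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }
}

class SyncCounterDemo {
    // Runs `threads` threads that each call increment() `perThread` times.
    static int countWith(int threads, int perThread) {
        Counter counter = new Counter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("Final count: " + countWith(4, 10000));
    }
}
```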

Synchronized Statement

- Suppose, you want to synchronize access to objects of a class that was not designed for multithreaded access or class was not created by you, but by a third party, and you do not have access to the source code. Thus, you can’t add synchronized to the appropriate methods within the class.

- A synchronized block ensures that a call to a synchronized method that is a member of objRef’s class occurs only after the current thread has successfully entered objRef’s monitor.

synchronized(objRef) {

objRef.call(msg); // statements to be synchronized

}

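Output without synchronization, for comparison: [Hello[Synchronized[World]]] (the messages interleave because of the race condition). With the synchronized block above, the output is the same as with the synchronized method: [Hello][Synchronized][World]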

Static Synchronization

If you make a static method synchronized, the lock is on the class, not on an object.

Problem without static synchronization

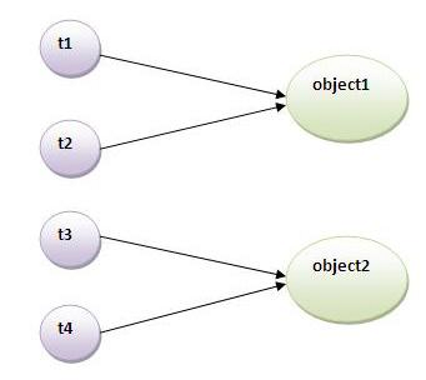

- Suppose there are two objects of a shared class (e.g. Table with a static variable) named object1 and object2, where threads t1 and t2 use object1 and threads t3 and t4 use object2. With a synchronized method or synchronized block there cannot be interference between t1 and t2, or between t3 and t4, because each pair refers to a common object that has a single lock.

- But there can be interference between t1 and t3, or between t2 and t4, because t1 and t3 acquire different locks.

- Static synchronization solves this problem: the lock is on the class, so there is no interference between t1 and t3 or between t2 and t4.
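The scenario can be sketched as follows. The `Table` class, its `rowsPrinted` counter, and the method names are assumptions made for illustration. Because countRow( ) is static synchronized, all four threads contend for the single lock on `Table.class`, even though they work through two different instances.

```java
class Table {
    private static int rowsPrinted = 0; // shared by every Table instance

    // static synchronized: the lock is on Table.class, not on an instance
    static synchronized void countRow() {
        rowsPrinted++;
    }

    static synchronized int rowsPrinted() {
        return rowsPrinted;
    }

    void printRows(int n) {
        for (int i = 0; i < n; i++) {
            countRow();
        }
    }
}

class StaticSyncDemo {
    // t1 and t2 share object1, t3 and t4 share object2; returns rows counted by this run.
    static int run(int rowsPerThread) {
        int before = Table.rowsPrinted();
        Table object1 = new Table();
        Table object2 = new Table();
        Thread[] threads = {
            new Thread(() -> object1.printRows(rowsPerThread), "t1"),
            new Thread(() -> object1.printRows(rowsPerThread), "t2"),
            new Thread(() -> object2.printRows(rowsPerThread), "t3"),
            new Thread(() -> object2.printRows(rowsPerThread), "t4")
        };
        for (Thread t : threads) {
            t.start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return Table.rowsPrinted() - before;
    }

    public static void main(String[] args) {
        System.out.println("Rows counted: " + run(5000));
    }
}
```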

Which is more preferred – Synchronized method or Synchronized block?

- A synchronized block is usually the preferred way

- because it can guard only the critical section and can lock on a dedicated private object, whereas a synchronized method always locks the whole object (this) for the entire call.

- if a class has multiple synchronized methods, even unrelated ones, a thread inside one of them blocks threads calling any of the others on the same object, because they all wait for the same object lock.

class Program {

public synchronized void f() { ......... }

}

// is equivalent to ...

class Program {

public void f() {

synchronized(this){ ... }

}

}

public class Program {

// two independent locks, so doSomething1() and doSomething2() do not block each other

private static final Object locker1 = new Object();

private static final Object locker2 = new Object();

public void doSomething1() {

synchronized(locker1) {

// ... do something protected by locker1

}

}

public void doSomething2() {

synchronized(locker2) {

// ... do something protected by locker2

}

}

}

Inter-Thread Communication

- Multithreading replaces event loop programming by dividing your tasks into discrete, logical units.

- Threads also provide a secondary benefit: they do away with polling.

- Polling is usually implemented by a loop that is used to check some condition repeatedly. Once the condition is true, appropriate action is taken. This wastes CPU time.

Example - Classic Queuing Problem

- Here one thread is producing some data and another is consuming it. To make the problem more interesting, suppose that the producer has to wait until the consumer is finished before it generates more data.

- To avoid polling, Java includes an elegant inter-thread communication mechanism via the wait( ), notify( ), and notifyAll( ) methods. These methods are implemented as final methods in Object, so all classes have them.

- All three methods can be called only from within a synchronized context.

- wait( ) tells the calling thread to give up the monitor and go to sleep until some other thread enters the same monitor and calls notify( ) or notifyAll( ).

- notify( ) wakes up a thread that called wait( ) on the same object.

- notifyAll( ) wakes up all the threads that called wait( ) on the same object. One of the threads will be granted access.

final void wait( ) throws InterruptedException

final void notify( )

final void notifyAll( )

- Although wait( ) normally waits until notify( ) or notifyAll( ) is called, there is a possibility that in very rare cases the waiting thread could be awakened due to a spurious wakeup. In this case, a waiting thread resumes without notify( ) or notifyAll( ) having been called. (In essence, the thread resumes for no apparent reason.)

- Because of this remote possibility, Oracle recommends that calls to wait( ) should take place within a loop that checks the condition on which the thread is waiting.

Correct Implementation of a Producer and Consumer problem

class Q {

int n;

boolean valueSet = false;

// synchronized get method on q

synchronized int get() {

while (!valueSet) {

try {

wait();

} catch (InterruptedException e) {

System.out.println("InterruptedException caught");

}

}

System.out.println("Got: " + n);

valueSet = false;

notify();

return n;

}

// synchronized put method on q

synchronized void put(int n) {

while (valueSet) {

try {

wait();

} catch (InterruptedException e) {

System.out.println("InterruptedException caught");

}

}

this.n = n;

valueSet = true;

System.out.println("Put: " + n);

notify();

}

}

class Producer implements Runnable {

Q q;

Producer(Q q) {

this.q = q;

new Thread(this, "Producer").start();

}

public void run() {

int i = 0;

while (true) {

q.put(i++);

}

}

}

class Consumer implements Runnable {

Q q;

Consumer(Q q) {

this.q = q;

new Thread(this, "Consumer").start();

}

public void run() {

while (true) {

q.get();

}

}

}

class PCFixed {

public static void main(String args[]) {

Q q = new Q();

new Producer(q);

new Consumer(q);

System.out.println("Press Control-C to stop.");

}

}

Deadlock

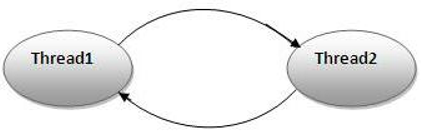

- Deadlock occurs when two threads have a circular dependency on a pair of synchronized objects.

- For example, suppose one thread enters the monitor on object X and another thread enters the monitor on object Y. If the thread in X tries to call any synchronized method on Y, it will block as expected. However, if the thread in Y, in turn, tries to call any synchronized method on X, the thread waits forever, because to access X, it would have to release its own lock on Y so that the first thread could complete.

- Deadlock is a difficult error to debug for two reasons:

- In general, it occurs only rarely, when the two threads time-slice in just the right way.

- It may involve more than two threads and two synchronized objects.

// An example of deadlock.

class A {

synchronized void foo(B b) {

String name = Thread.currentThread().getName();

System.out.println(name + " entered A.foo");

try {

Thread.sleep(1000);

} catch (Exception e) {

System.out.println("A Interrupted");

}

System.out.println(name + " trying to call B.last()");

b.last();

}

synchronized void last() {

System.out.println("Inside A.last");

}

}

class B {

synchronized void bar(A a) {

String name = Thread.currentThread().getName();

System.out.println(name + " entered B.bar");

try {

Thread.sleep(1000);

} catch (Exception e) {

System.out.println("B Interrupted");

}

System.out.println(name + " trying to call A.last()");

a.last();

}

synchronized void last() {

System.out.println("Inside B.last");

}

}

class Deadlock implements Runnable {

A a = new A();

B b = new B();

Deadlock() {

Thread.currentThread().setName("MainThread");

Thread t = new Thread(this, "RacingThread");

t.start();

a.foo(b); // get lock on A in this thread.

System.out.println("Back in main thread");

}

public void run() {

b.bar(a); // get lock on B in other thread.

System.out.println("Back in other thread");

}

public static void main(String args[]) {

new Deadlock();

}

}

// Output

MainThread entered A.foo

RacingThread entered B.bar

MainThread trying to call B.last()

RacingThread trying to call A.last()

Because the program has deadlocked, you need to press ctrl-c to end the program. You can see a full thread and monitor cache dump by pressing ctrl-break on a PC. You will see that RacingThread owns the monitor on b, while it is waiting for the monitor on a. At the same time, MainThread owns a and is waiting to get b. This program will never complete. As this example illustrates, if your multithreaded program locks up occasionally, deadlock is one of the first conditions that you should check for.
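One common way to avoid this kind of circular wait is to make every thread acquire the locks in the same order. This is a general technique, not something the example above does. The sketch below uses hypothetical names (`lockA`, `lockB`, `work`) and finishes because neither thread ever holds `lockB` while waiting for `lockA`.

```java
class LockOrderingDemo {
    private static final Object lockA = new Object();
    private static final Object lockB = new Object();
    private static int completed = 0; // only modified while holding both locks

    // Every caller takes lockA first, then lockB, so no circular wait can form.
    static void work() {
        synchronized (lockA) {
            synchronized (lockB) {
                completed++;
            }
        }
    }

    // Runs two threads that each call work() perThread times; returns the calls completed.
    static int run(int perThread) {
        int before = completed;
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                work();
            }
        };
        Thread t1 = new Thread(task, "First");
        Thread t2 = new Thread(task, "Second");
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed - before;
    }

    public static void main(String[] args) {
        System.out.println("Completed without deadlock: " + run(1000));
    }
}
```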

Suspending, Resuming, and Stopping Threads

- Sometimes, suspending execution of a thread is useful.

- For example, a separate thread can be used to display the time of day. If the user does not want a clock, that thread can be suspended.

- Prior to Java 2, a program used suspend( ), resume( ), and stop( ), which are methods defined by Thread, to pause, restart, and stop the execution of a thread. These are deprecated now.

- suspend( ) can sometimes cause serious system failures as locks are not relinquished.

- resume( ) is also deprecated, because it cannot be used without suspend( ).

- stop( ) can sometimes cause serious system failures. Assume that a thread is writing to a critically important data structure and has completed only part of its changes. If that thread is stopped at that point, that data structure might be left in a corrupted state. The trouble is that stop( ) causes any lock the calling thread holds to be released. Thus, the corrupted data might be used by another thread that is waiting on the same lock.

- A thread must be designed so that the run( ) method periodically checks to determine whether that thread should suspend, resume, or stop its own execution. Typically, this is accomplished by establishing a flag variable that indicates the execution state of the thread. As long as this flag is set to “running,” the run( ) method must continue to let the thread execute. If this variable is set to “suspend,” the thread must pause. If it is set to “stop”, the thread must terminate.

// Suspending and resuming a thread

class NewThread implements Runnable {

String name; // name of thread

Thread t;

boolean flag;

NewThread(String threadname) {

name = threadname;

t = new Thread(this, name);

System.out.println("New thread: " + t);

flag = false;

t.start(); // Start the thread

}

public void run() {

try {

for (int i = 15; i > 0; i--) {

System.out.println(name + ": " + i);

Thread.sleep(200);

synchronized(this) {

while (flag) {

wait();

}

}

}

} catch (InterruptedException e) {

System.out.println(name + " interrupted.");

}

System.out.println(name + " exiting.");

}

synchronized void mysuspend() {

flag = true;

}

synchronized void myresume() {

flag = false;

notify();

}

}

class SuspendResume {

public static void main(String args[]) {

NewThread ob1 = new NewThread("One");

NewThread ob2 = new NewThread("Two");

try {

Thread.sleep(1000);

ob1.mysuspend();

System.out.println("Suspending thread One");

Thread.sleep(1000);

ob1.myresume();

System.out.println("Resuming thread One");

ob2.mysuspend();

System.out.println("Suspending thread Two");

Thread.sleep(1000);

ob2.myresume();

System.out.println("Resuming thread Two");

} catch (InterruptedException e) {

System.out.println("Main thread Interrupted");

}

try { // wait for threads to finish

System.out.println("Waiting for threads to finish.");

ob1.t.join();

ob2.t.join();

} catch (InterruptedException e) {

System.out.println("Main thread Interrupted");

}

System.out.println("Main thread exiting.");

}

}
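The same flag technique covers stopping a thread. A minimal sketch, assuming a hypothetical `StoppableWorker` whose run( ) method checks a `running` flag on every pass. The flag is declared volatile so that the write made by the requesting thread is visible to the worker.

```java
class StoppableWorker implements Runnable {
    private volatile boolean running = true;

    // Called from another thread to ask the worker to finish.
    void requestStop() {
        running = false;
    }

    @Override
    public void run() {
        while (running) {          // checked on every pass
            try {
                Thread.sleep(10);  // stands in for one unit of real work
            } catch (InterruptedException e) {
                return;            // treat an interrupt as a request to stop
            }
        }
        System.out.println("Worker stopping cleanly.");
    }
}

class StopFlagDemo {
    // Starts the worker, asks it to stop, and reports whether it terminated.
    static boolean stopsOnRequest() {
        StoppableWorker worker = new StoppableWorker();
        Thread t = new Thread(worker, "Stoppable");
        t.start();
        try {
            Thread.sleep(100);
            worker.requestStop();
            t.join(2000); // generous upper bound for the worker to notice the flag
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !t.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("Worker stopped: " + stopsOnRequest());
    }
}
```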

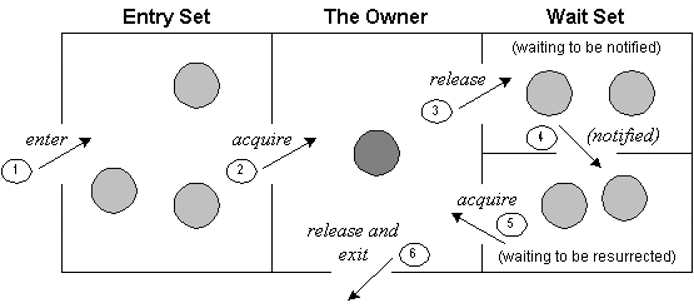

Understanding the process

- The point to point explanation of the above diagram is as follows:

- Threads enter to acquire the lock.

- The lock is acquired by one thread.

- The thread goes to the waiting state if wait() is called on the object, which also releases the lock. Otherwise it releases the lock and exits when it finishes.

- When another thread calls notify() or notifyAll(), the waiting thread moves to the notified (runnable) state.

- The thread is now available to reacquire the lock.

- After completing its task, the thread releases the lock and exits the monitor of the object.

| wait() | sleep() |
|---|---|
| wait() method releases the lock | sleep() method doesn’t release the lock. |
| method of Object class | method of Thread class |
| non-static method | static method |
| Should be notified by notify() or notifyAll() methods | After the specified amount of time, sleep is completed. |

Why wait(), notify() and notifyAll() methods are defined in Object class not Thread class?

- In Java every Object has a monitor

- wait, notify methods are used to wait for the Object monitor or to notify other threads that Object monitor is free now.

- There is no monitor on threads in java and synchronization can be used with any Object, that’s why it’s part of Object class so that every class in java has these essential methods for inter thread communication.

Why wait(), notify() and notifyAll() methods have to be called from synchronized method or block?

To call these methods, the calling thread must own the object's monitor, and a monitor can be acquired only through synchronization. They therefore need to be called from a synchronized method or block; otherwise an IllegalMonitorStateException is thrown.
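A small sketch of this rule, using hypothetical names. Calling notify( ) without holding the object's monitor throws IllegalMonitorStateException, while the same call inside a synchronized block on that object succeeds.

```java
class MonitorOwnershipDemo {
    // notify() outside a synchronized context: the caller does not own the monitor.
    static boolean notifyWithoutLockFails() {
        Object lock = new Object();
        try {
            lock.notify();
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;
        }
    }

    // notify() inside synchronized(lock): the caller owns the monitor, so the call is legal.
    static boolean notifyWithLockSucceeds() {
        Object lock = new Object();
        synchronized (lock) {
            lock.notify(); // no thread is waiting, so this simply returns
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println("Fails without lock: " + notifyWithoutLockFails());
        System.out.println("Succeeds with lock: " + notifyWithLockSucceeds());
    }
}
```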

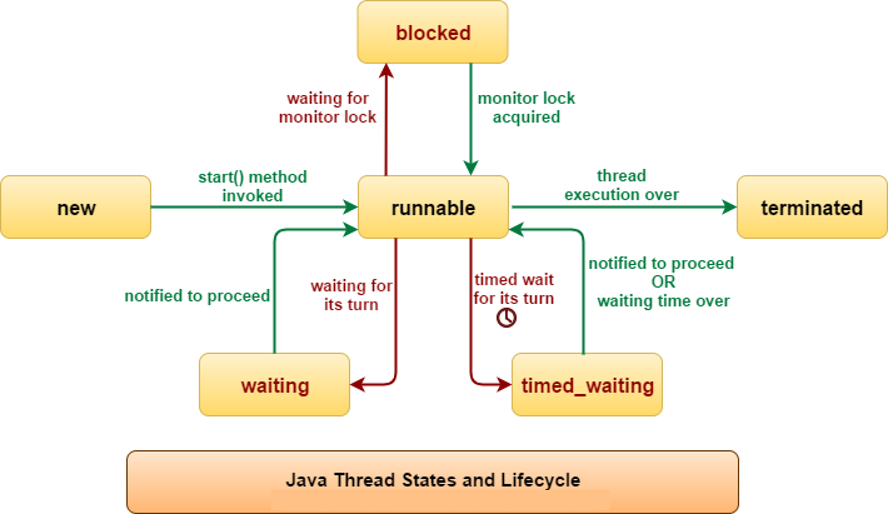

Thread’s State

Thread.State - indicates the state of the thread at the time at which the call was made (enumeration defined by Thread)

Thread.State ts = thrd.getState();

if(ts == Thread.State.RUNNABLE) // ...

| Value | State |
|---|---|
| BLOCKED | A thread that has suspended execution because it is waiting to acquire a lock. |
| NEW | A thread that has not begun execution. |
| RUNNABLE | A thread that either is currently executing or will execute when it gains access to CPU. |
| TERMINATED | A thread that has completed execution. |
| TIMED_WAITING | A thread that has suspended execution for a specified period of time, such as after sleep(). This state is also entered when a timeout version of wait() or join() is called. |
| WAITING | A thread that has suspended execution because it is waiting for some action to occur. For example, it is waiting because of a call to a non-timeout version of wait() or join(). |
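Two of these values can be observed deterministically: a thread is NEW before start( ) and TERMINATED once join( ) has returned. The sketch below uses the illustrative names `StateDemo` and `observe`. The states in between depend on timing and are not sampled here.

```java
class StateDemo {
    // Returns the state before start() and the state after join().
    static Thread.State[] observe() {
        Thread t = new Thread(() -> { }, "Short-lived");
        Thread.State before = t.getState(); // NEW: not started yet
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        Thread.State after = t.getState();  // TERMINATED: run() has completed
        return new Thread.State[] { before, after };
    }

    public static void main(String[] args) {
        Thread.State[] states = observe();
        System.out.println(states[0] + " -> " + states[1]);
    }
}
```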

- A thread’s state may change after the call to getState( ). Thus, getState( ) is not intended to provide a means of synchronizing threads. It’s used for debugging or for profiling a thread’s run-time characteristics.

- The key to utilizing Java’s multithreading features effectively is to think concurrently rather than serially.

- For example, when you have two subsystems within a program that can execute concurrently, make them individual threads. With the careful use of multithreading, you can create very efficient programs.

- If you create too many threads, more CPU time will be spent in context switching than executing your program!

- The thread scheduler in Java is the part of the JVM (in practice, working with the operating system) that decides which thread should run. There is no guarantee as to which runnable thread the scheduler will choose. On a single core, only one thread can run at a time.

- The thread scheduler mainly uses preemptive or time slicing scheduling to schedule the threads.

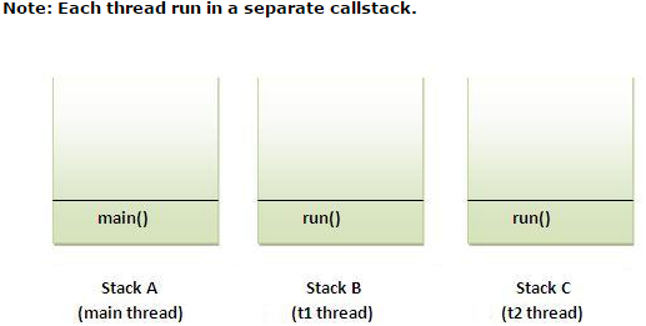

- start() method of Thread class is used to start a newly created thread. It performs following tasks:

- A new thread starts (with new callstack).

- The thread moves from New state to the Runnable state.

- When the thread gets a chance to execute, its target run() method will run.

Difference between preemptive scheduling and time slicing?

- Under preemptive scheduling, the highest priority task executes until it enters the waiting or dead states or a higher priority task comes into existence.

- Under time slicing, a task executes for a predefined slice of time and then re-enters the pool of ready tasks. The scheduler then determines which task should execute next, based on priority and other factors.

Can we start a thread twice?

- No. After starting a thread, it can never be started again.

- If you do so, an IllegalThreadStateException is thrown: the thread runs once, and the second call to start() throws the exception.
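A minimal sketch of this behaviour, with the illustrative names `StartTwiceDemo` and `secondStartFails`:

```java
class StartTwiceDemo {
    // Starts a thread, then tries to start it again and reports whether that failed.
    static boolean secondStartFails() {
        Thread t = new Thread(() -> System.out.println("running"), "Once");
        t.start(); // first start: legal
        try {
            t.start(); // second start: always rejected
            return false;
        } catch (IllegalThreadStateException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("Second start rejected: " + secondStartFails());
    }
}
```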

What if we call run() method directly instead start() method?

- Each thread starts in a separate call stack.

- Invoking the run() method from main thread, the run() method goes onto the current call stack rather than at the beginning of a new call stack.

public static void main(String args[]) {

TestCallRun2 t1 = new TestCallRun2();

TestCallRun2 t2 = new TestCallRun2();

t1.run();

t2.run();

}

class TestCallRun2 extends Thread {

public void run() {

for (int i = 1; i < 5; i++) {

try {

Thread.sleep(500);

} catch (InterruptedException e) {

System.out.println(e);

}

System.out.println(i);

}

}

}

Output: 1 2 3 4 1 2 3 4

As you can see, there is no context switching, because here t1 and t2 are treated as normal objects, not thread objects: t1.run() completes on the main thread before t2.run() begins.
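The difference can also be checked by recording which thread actually executes run( ). In this sketch, `RunVsStartDemo` and `nameSeenBy` are hypothetical names. With start( ) the body runs on the new thread named "Worker"; with a direct run( ) call it runs on whichever thread made the call.

```java
class RunVsStartDemo {
    // Returns the name of the thread that executed the task body.
    static String nameSeenBy(boolean useStart) {
        String[] seen = new String[1];
        Thread t = new Thread(() -> seen[0] = Thread.currentThread().getName(), "Worker");
        if (useStart) {
            t.start(); // new call stack: the body runs on "Worker"
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        } else {
            t.run();   // ordinary method call: the body runs on the calling thread
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("start(): " + nameSeenBy(true));
        System.out.println("run():   " + nameSeenBy(false));
    }
}
```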

Daemon Thread

- A daemon thread in Java is a service provider thread that provides services to user threads.

- It typically runs background work. A new thread inherits its priority and its daemon status from the thread that created it.

- Its life depends on the mercy of user threads, i.e. when all the user threads have died, the JVM terminates daemon threads automatically.

- Many Java daemon threads run automatically, e.g. gc, finalizer etc.

- You can see all the detail by typing the jconsole in the command prompt. The jconsole tool provides information about the loaded classes, memory usage, running threads etc.

| Method | Description |
|---|---|
| public void setDaemon(boolean status) | marks this thread as a daemon thread or a user thread. |
| public boolean isDaemon() | checks whether this thread is a daemon thread. |

- setDaemon() must be called before the thread is started; calling it on a thread that is already running throws IllegalThreadStateException.

t1.start();

t1.setDaemon(true); //will throw exception here
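Both orderings can be sketched side by side. `DaemonDemo` and `demo` are illustrative names, and the 200 ms sleep is an assumption that keeps the thread alive long enough for the second call.

```java
class DaemonDemo {
    // Returns { isDaemon after setDaemon(true) before start, whether setDaemon after start threw }.
    static boolean[] demo() {
        Thread t = new Thread(() -> {
            try {
                Thread.sleep(200); // stay alive while the caller tries setDaemon again
            } catch (InterruptedException e) {
                // nothing to clean up
            }
        }, "Background");
        t.setDaemon(true);             // legal: the thread has not been started
        boolean daemon = t.isDaemon();
        t.start();
        boolean threw;
        try {
            t.setDaemon(false);        // illegal: the thread is already running
            threw = false;
        } catch (IllegalThreadStateException e) {
            threw = true;
        }
        return new boolean[] { daemon, threw };
    }

    public static void main(String[] args) {
        boolean[] r = demo();
        System.out.println("isDaemon: " + r[0] + ", setDaemon after start threw: " + r[1]);
    }
}
```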

Common Tasks - FAQ Code

Single Task - Multiple Threads

How to perform single task by multiple threads?

If many threads have to perform a single task, write only one run() method and start several threads that share it.

class TestMultitasking1 extends Thread {

public void run() {

System.out.println("task one");

}

public static void main(String args[]) {

TestMultitasking1 t1 = new TestMultitasking1();

TestMultitasking1 t2 = new TestMultitasking1();

t1.start();

t2.start();

}

}

// Output:

task one task one

Multiple Task - Multiple Threads

How to perform multiple tasks by multiple threads?

To have multiple threads perform multiple tasks, define multiple run() methods.

class Simple1 extends Thread {

public void run() {

System.out.println("task 1");

}

}

class Simple2 extends Thread {

public void run() {

System.out.println("task 2");

}

}

class TestMultitasking3 {

public static void main(String args[]) {

Simple1 t1 = new Simple1();

Simple2 t2 = new Simple2();

t1.start();

t2.start();

}

}

// Output (the order may vary between runs):

task 1

task 2

Print Odd Even - without wait or synchronized

Print natural numbers 1 to 20 using two threads without using wait or synchronized where one thread prints only odd number and other thread prints only even number?

public class EvenOddPrinterWithoutWait {

// volatile so that each thread sees the other thread's update to the flag

static volatile boolean flag = true;

public static void main(String[] args) {

Runnable odd = () -> {

for (int i = 1; i <= 20;) {

if (flag) {

i = printIt(i);

}

}

};

Runnable even = () -> {

for (int i = 2; i <= 20;) {

if (!flag) {

i = printIt(i);

}

}

};

Thread t1 = new Thread(odd, "Odd");

Thread t2 = new Thread(even, "Even");

t1.start();

t2.start();

}

private static int printIt(int i) {

System.out.println(Thread.currentThread().getName() + "\t : " + i);

flag = !flag;

return (i + 2);

}

}

Print Odd Even - using wait or synchronized

Print natural numbers 1 to 20 using two threads using wait or synchronized where one thread prints only odd number and other thread prints only even number?

public class EvenOddPrinterWithWait {

public static final int limit = 20;

public static void main(String...args) {

// Runnable for even printer

final SharedPrinterWithWait counterObj = new SharedPrinterWithWait(20);

Runnable evenNoPrinter = () -> {

int num = 0;

while (true) {

if (num >= limit) {

break;

}

num = counterObj.printNextEven();

}

};

// Runnable for odd printer

Runnable oddNoPrinter = () -> {

int num = 0;

while (true) {

if (num >= limit) {

break;

}

num = counterObj.printNextOdd();

}

};

new Thread(oddNoPrinter).start();

new Thread(evenNoPrinter).start();

}

}

public class SharedPrinterWithWait {

private int count = 0;

private boolean isEven = true;

private int upperLimit;

SharedPrinterWithWait(int limit) {

upperLimit = limit;

}

public synchronized int printNextEven() {

// Wait while the count is even, i.e. while it is the odd printer's turn.

while (isEven) {

try {

wait();

} catch (InterruptedException e) {}

}

count++;

if (count <= upperLimit) {

printEven(count);

}

// Toggle status: the count is even again.

isEven = true;

// Notify the odd printer that the status has changed.

notifyAll();

return count;

}

public synchronized int printNextOdd() {

// Wait while the count is odd, i.e. while it is the even printer's turn.

while (!isEven) {

try {

wait();

} catch (InterruptedException e) {}

}

count++;

if (count <= upperLimit) {

printOdd(count);

}

// Toggle status.

isEven = false;

// Notify the waiting printer thread that the status has changed.

notifyAll();

return count;

}

public void printOdd(int num) {

System.out.println("ODD\t ## " + num);

}

public void printEven(int num) {

System.out.println("EVEN\t ## " + num);

}

}

Print Odd Even - using semaphore

Print natural numbers 1 to 20 using two threads using semaphore

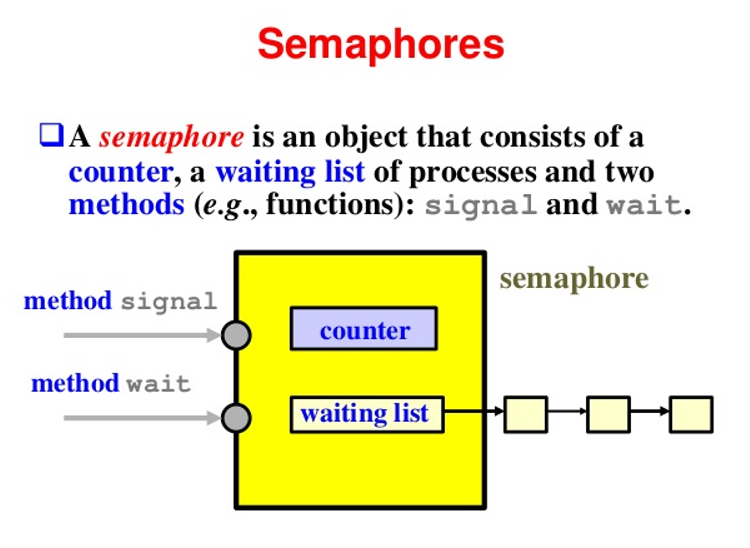

- A semaphore controls access to a shared resource through the use of a counter. If the counter is greater than zero, then access is allowed. If it is zero, then access is denied.

- Here we have two threads. Both the threads have an object of the SharedPrinter class. The SharedPrinter class will have two semaphores, semOdd and semEven which will have 1 and 0 permits to start with. This will ensure that odd number gets printed first.

- To print an odd number, the acquire() method is called on semOdd, and since the initial permit is 1, it acquires the access successfully, prints the odd number and calls release() on semEven.

- Calling release() will increment the permit by 1 for semEven, and the even thread can then successfully acquire the access and print the even number.

public static void main(String[] args) {

int max = 20;

SharedPrinter sp = new SharedPrinter();

Thread odd = new Thread(new OddRunnable(sp, max), "Odd");

Thread even = new Thread(new EvenRunnable(sp, max), "Even");

odd.start();

even.start();

}

public class OddRunnable implements Runnable {

private SharedPrinter sp;

private int max;

OddRunnable(SharedPrinter sp, int max) {

this.sp = sp;

this.max = max;

}

@Override

public void run() {

for (int i = 1; i <= max; i = i + 2) {

sp.printOddNum(i);

}

}

}

public class EvenRunnable implements Runnable {

private SharedPrinter sp;

private int max;

EvenRunnable(SharedPrinter sp, int max) {

this.sp = sp;

this.max = max;

}

@Override

public void run() {

for (int i = 2; i <= max; i = i + 2)

sp.printEvenNum(i);

}

}

import java.util.concurrent.Semaphore;

public class SharedPrinter {

private Semaphore semEven = new Semaphore(0);

private Semaphore semOdd = new Semaphore(1);

void printEvenNum(int num) {

try {

semEven.acquire();

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

System.out.println(String.format("%s\t: %s", Thread.currentThread().getName(), num));

semOdd.release();

}

void printOddNum(int num) {

try {

semOdd.acquire();

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

System.out.println(String.format("%s\t: %s", Thread.currentThread().getName(), num));

semEven.release();

}

}

Java Runtime

- The java.lang.Runtime class is used to interact with the Java runtime environment.

- It provides methods to execute a process, invoke GC, get total and free memory etc.

- There is only one instance of java.lang.Runtime available per Java application.

- The Runtime.getRuntime() method returns the singleton instance of Runtime class.

import java.io.IOException;

public class ShutdownCommand {

public static void main(String[] args) {

Runtime r = Runtime.getRuntime();

System.out.println("Total Memory: " + r.totalMemory());

System.out.println("Free Memory: " + r.freeMemory());

for (int i = 0; i < 10000; i++) {

new ShutdownCommand();

}

System.out.println("After 10000 instance, Free Memory: " + r.freeMemory());

System.gc();

System.out.println("After gc(), Free Memory: " + r.freeMemory());

System.out.println(r.availableProcessors()); // Number Of Processors

// Windows-only examples: open Notepad, then shut the machine down.

try {

r.exec("notepad");

// -s to shutdown, -r to restart system and -t switch to specify time delay.

r.exec("shutdown -s -t 0");

} catch (IOException e) {

e.printStackTrace();

}

}

}

| Method | Description |

|---|---|

| public static Runtime getRuntime() | returns the instance of Runtime class. |

| public void exit(int status) | terminates the current virtual machine. |

| public void addShutdownHook(Thread hook) | registers new hook thread. |

| public Process exec(String command) throws IOException | executes given command in a separate process. |

| public int availableProcessors() | returns no. of available processors. |

| public long freeMemory() | returns amount of free memory in JVM. |

| public long totalMemory() | returns amount of total memory in JVM. |
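Of the methods in the table, addShutdownHook is the only one not used in the example above. The following is a minimal sketch of registering a hook; the class name ShutdownHookDemo, the helper registerHook and the thread name are hypothetical.

```java
class ShutdownHookDemo {

    // Registers a hook thread that the JVM starts when it begins shutting down.
    static Thread registerHook() {
        Thread hook = new Thread(
                () -> System.out.println("Shutdown hook running: releasing resources"),
                "cleanup-hook"); // illustrative thread name
        Runtime.getRuntime().addShutdownHook(hook);
        return hook;
    }

    public static void main(String[] args) {
        Runtime runtime = Runtime.getRuntime();
        registerHook();
        long usedBytes = runtime.totalMemory() - runtime.freeMemory();
        System.out.println("Processors: " + runtime.availableProcessors());
        System.out.println("Used memory (bytes): " + usedBytes);
        // When main returns, the JVM starts shutting down and runs the hook.
    }
}
```

A registered hook can be withdrawn again with Runtime.removeShutdownHook(hook), which returns true if the hook was registered.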

Java Concurrency

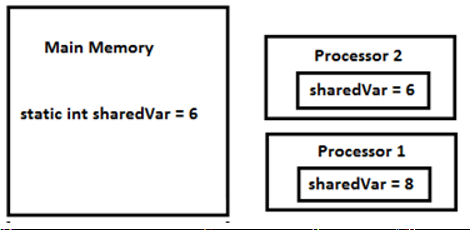

volatile Keyword

- Using volatile is another way (alongside synchronized and the atomic wrapper classes) of making a class thread safe.

- volatile is used to indicate that a variable’s value will be modified by different threads.

- Declaring a volatile Java variable means:

- The value of this variable will never be cached thread-locally: all reads and writes will go straight to “main memory”;

- Access to the variable has the visibility effects of a synchronized block, synchronized on the variable itself, but no lock is acquired, so there is no mutual exclusion.

class SharedObj {

// Changes made to sharedVar in one thread may not immediately reflect in other thread

static int sharedVar = 6;

// static volatile int sharedVar = 6;

}

- Suppose that two threads are working on SharedObj. If two threads run on different processors each thread may have its own local copy of sharedVar.

- If one thread modifies its value the change might not reflect in the original one in the main memory instantly. This depends on the write policy of cache. Now the other thread is not aware of the modified value which leads to data inconsistency.

Write Policy

- The timing of writing data from cache to main memory is known as the write policy.

- In a write-through cache, every write to the cache causes a write to main memory.

- In a write-back (or copy-back) cache, writes are not immediately mirrored to main memory; the cache instead tracks which locations have been written over, marking them as dirty.

- Note that a write to a normal variable without any synchronization action might not be visible to a reading thread; in other words, such a program is not guaranteed to be sequentially consistent.

- A static variable has only one copy in main memory, although each thread may still work with a cached value.

volatile vs synchronized

- Two important features of locks and synchronization.

- Mutual Exclusion: Only one thread or process can execute a block of code (critical section) at a time.

- Visibility: It means that changes made by one thread to shared data are visible to other threads.

- Synchronized : both mutual exclusion and visibility

- Volatile : visibility only; no mutual exclusion, so compound operations are not atomic

| Characteristic | Synchronized | Volatile |

|---|---|---|

| Type of target variable | Object | Object or primitive |

| null allowed? | No | Yes |

| Can block? (hold on to any lock) | Yes | No |

| All cached variables synchronized with main memory on access? | Yes | Yes |

| When synchronization happens | When you explicitly enter/exit a synchronized block | Whenever a volatile variable is accessed. |

| Can be used to combine several operations into one atomic operation? | Yes | No (only individual reads and writes of a volatile are atomic) |

| Used on | methods and code blocks | variables only |


- x++ is a read-modify-write sequence of operations that must execute atomically.

- Because accessing a volatile variable never holds a lock, it is not suitable for cases where we want to read-update-write as an atomic operation (unless we’re prepared to “miss an update”);

- A volatile variable that is an object reference may be null (you’re effectively synchronizing on the reference, not the actual object). Attempting to synchronize on a null object will throw a NullPointerException.

- The volatile keyword also prevents the compiler or JVM from reordering code across the volatile access, i.e. from moving operations across that synchronization barrier.

- In Java, reads and writes are atomic for all variables declared using Java volatile keyword (including long and double variables).

- volatile keyword on variables reduces the risk of memory consistency errors as any write to a volatile variable in Java establishes a happens-before relationship with subsequent reads of that same variable.

- The volatile keyword does not make operations atomic. It is a common misconception that ++ becomes atomic once the variable is declared volatile; to make the operation atomic you still need exclusive access through a synchronized method or block.

- If a variable is not shared between multiple threads, you do not need volatile for it. volatile is not a replacement for the synchronized keyword, but it can be used as an alternative in certain cases.

- The short gap between reading the volatile variable and writing its new value creates a race condition: multiple threads might read the same value, each compute a new value, and then overwrite each other’s results when writing back to main memory. Multiple threads incrementing the same counter is exactly such a situation, and a volatile variable is not enough for it.

When to use Volatile

- Use a volatile variable if you want reads and writes of long and double variables to be atomic. Both are 64-bit types, and writes to non-volatile long and double variables are not guaranteed to be atomic; the behavior is platform dependent. Some platforms write a long or double in two steps of 32 bits each, so a thread may see the first 32 bits from one write and the second 32 bits from another. Declaring the variable volatile avoids this.

- A volatile variable tells the compiler that a field may be accessed by multiple threads, which prevents reorderings and optimizations that are undesirable in a multi-threaded environment. Without volatile, the compiler is free to reorder the code and to cache the variable’s value instead of reading it from main memory each time. The following example may loop forever without the volatile modifier: there is no guarantee that one thread sees the value of isActive updated by another thread, and the compiler may cache isActive instead of reading it from main memory in every iteration. Making isActive volatile avoids these issues.

private boolean isActive = true;

public void printMessage() {

while (isActive)

System.out.println("Thread is Active");

}
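The loop above only terminates reliably once the flag is declared volatile. The following is a minimal sketch of that fix; the class name VolatileFlagDemo, the stop() method and the helper stopsWithin are hypothetical additions for illustration.

```java
class VolatileFlagDemo {

    // volatile: the write in stop() is guaranteed to become visible to the loop below.
    private volatile boolean isActive = true;
    private long iterations = 0;

    void runLoop() {
        while (isActive) {
            iterations++; // busy work; only the worker thread touches this counter
        }
    }

    void stop() {
        isActive = false;
    }

    // Starts the loop, asks it to stop, and reports whether it ended within the timeout.
    static boolean stopsWithin(long timeoutMillis) throws InterruptedException {
        VolatileFlagDemo demo = new VolatileFlagDemo();
        Thread worker = new Thread(demo::runLoop, "worker");
        worker.setDaemon(true); // safety net: a stuck loop cannot keep the JVM alive
        worker.start();
        Thread.sleep(50);       // let the loop spin for a moment
        demo.stop();
        worker.join(timeoutMillis);
        return !worker.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Worker stopped: " + stopsWithin(2000));
    }
}
```

If volatile is removed from isActive, the worker is no longer guaranteed to observe the update, and on some JVMs the loop may keep running.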

- Another place where a volatile variable is useful is in fixing double-checked locking in the Singleton pattern; double-checked locking was broken under the Java 1.4 memory model.

volatile vs transient

| transient | volatile |

|---|---|

| used with instance variables to exclude them from serialization; the value of a transient field is not persisted. | tells the compiler and JVM to always read the value from main memory and to honor the happens-before relationship, so that writes to the variable are visible across threads. |

| has no useful effect on static fields, because static fields are never serialized. | can be used along with static. |

| transient variables are initialized with their default value during deserialization; restoring a meaningful value has to be handled by application code. | |

atomic variables

- The java.util.concurrent.atomic package defines classes that support atomic operations on single variables.

- All classes have get and set methods that work like reads and writes on volatile variables.

- set has a happens-before relationship with any subsequent get on the same variable.

- The atomic compareAndSet method also has these memory consistency features, as do the simple atomic arithmetic methods that apply to integer atomic variables.

- In the first example below (Counter), if two threads try to read and update the value at the same time, updates may be lost.

class Counter {

private int c = 0;

public void increment() {

c++;

}

public void decrement() {

c--;

}

public int value() {

return c;

}

}

class SynchronizedCounter {

private int c = 0;

public synchronized void increment() {

c++;

}

public synchronized void decrement() {

c--;

}

public synchronized int value() {

return c;

}

}

- For this simple class, synchronization is an acceptable solution.

- For a more complicated class, we can use atomic wrappers/classes. Example, replace int with AtomicInteger.
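The lost-update problem can be made visible by incrementing each counter from several threads at once. The following is a sketch under stated assumptions: the class name LostUpdateDemo and the helper hammer are hypothetical, and the two nested counter classes are trimmed copies of the ones shown above.

```java
class LostUpdateDemo {

    static class Counter {
        private int c = 0;
        void increment() { c++; } // read-modify-write, not atomic
        int value() { return c; }
    }

    static class SynchronizedCounter {
        private int c = 0;
        synchronized void increment() { c++; }
        synchronized int value() { return c; }
    }

    // Runs task perThread times on each of the given number of threads and waits for all of them.
    static void hammer(int threads, int perThread, Runnable task) throws InterruptedException {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    task.run();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
    }

    static int unsafeTotal(int threads, int perThread) throws InterruptedException {
        Counter counter = new Counter();
        hammer(threads, perThread, counter::increment);
        return counter.value();
    }

    static int synchronizedTotal(int threads, int perThread) throws InterruptedException {
        SynchronizedCounter counter = new SynchronizedCounter();
        hammer(threads, perThread, counter::increment);
        return counter.value();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Expected:     " + (4 * 100_000));
        System.out.println("Unsafe:       " + unsafeTotal(4, 100_000));
        System.out.println("Synchronized: " + synchronizedTotal(4, 100_000));
    }
}
```

The synchronized total always equals the expected value; the unsafe total can be lower, and by how much varies from run to run.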

Locks

- One of the ways to manage access to an object is to use locks by using the synchronized keyword.

- The synchronized keyword ensures that only one thread can enter the method at one time.

- Additionally, we need to add the volatile keyword to ensure proper reference visibility among threads.

Using locks solves the problem. However, performance takes a hit.

- When multiple threads attempt to acquire a lock, one of them wins, while the rest of the threads are either blocked or suspended. The process of suspending and then resuming a thread is very expensive and affects the overall efficiency of the system.

- In a small program, such as the counter, the time spent in context switching may become much more than actual code execution, thus greatly reducing overall efficiency.

import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounter {

private AtomicInteger c = new AtomicInteger(0);

public void increment() {

c.incrementAndGet();

}

public void decrement() {

c.decrementAndGet();

}

public int value() {

return c.get();

}

}

AtomicBoolean

AtomicInteger

AtomicIntegerArray

AtomicIntegerFieldUpdater

AtomicLong

AtomicLongArray

AtomicLongFieldUpdater

AtomicReference

LongAdder
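LongAdder is the one class in this list that is not an Atomic* wrapper: it is intended for counters that many threads update frequently and that are read only occasionally. A minimal sketch, with the class name LongAdderDemo and the helper countWith as hypothetical names:

```java
import java.util.concurrent.atomic.LongAdder;

class LongAdderDemo {

    // Increments a shared LongAdder from several threads and returns the final sum.
    static long countWith(int threads, int perThread) throws InterruptedException {
        LongAdder hits = new LongAdder();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    hits.increment(); // contended updates are spread over internal cells
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return hits.sum(); // combines the cells into one total
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Total: " + countWith(4, 100_000));
    }
}
```

Note that sum() is not an atomic snapshot while updates are still in flight; here it is called only after all workers have been joined.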

Atomic Operations

- There is a branch of research focused on creating non-blocking algorithms (lock-free and wait-free algorithms) for concurrent environments. These algorithms exploit low-level atomic machine instructions such as compare-and-swap (CAS) to ensure data integrity.

- A typical CAS operation works on three operands:

- The memory location on which to operate (M)

- The existing expected value (A) of the variable

- The new value (B) which needs to be set

- The CAS operation updates atomically the value in M to B, but only if the existing value in M matches A, otherwise no action is taken. In both cases, the existing value in M is returned. This combines three steps – getting the value, comparing the value and updating the value – into a single machine level operation.

- When multiple threads attempt to update the same value through CAS, one of them wins and updates the value. However, unlike in the case of locks, no other thread gets suspended; instead, they’re simply informed that they did not manage to update the value. The threads can then proceed to do further work and context switches are completely avoided.

- One other consequence is that the core program logic becomes more complex. This is because we have to handle the scenario when the CAS operation didn’t succeed. We can retry it again and again till it succeeds, or we can do nothing and move on depending on the use case.
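The three operands map directly onto AtomicInteger.compareAndSet(expected, newValue), where the AtomicInteger itself plays the role of the memory location M. A minimal sketch (the class name CasDemo and the helper casTwice are hypothetical):

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasDemo {

    // Performs two CAS attempts against M and reports what happened.
    static String casTwice() {
        AtomicInteger m = new AtomicInteger(5);  // memory location M, currently 5
        boolean first = m.compareAndSet(5, 6);   // expected A = 5 matches, M becomes B = 6
        boolean second = m.compareAndSet(5, 7);  // expected A = 5 is stale, M is left unchanged
        return first + " " + second + " " + m.get();
    }

    public static void main(String[] args) {
        System.out.println(casTwice()); // prints "true false 6"
    }
}
```

Unlike the hardware-level description above, compareAndSet returns a boolean rather than the existing value; on Java 9 and later, compareAndExchange returns the value that was found in M.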

Atomic Variables in Action

- The most commonly used atomic variable classes in Java are AtomicInteger, AtomicLong, AtomicBoolean, and AtomicReference.

- These classes represent an int, long, boolean and object reference respectively which can be atomically updated.

| get() | gets the value from the memory, so that changes made by other threads are visible; equivalent to reading a volatile variable |

| set() | writes the value to memory, so that the change is visible to other threads; equivalent to writing a volatile variable |

| lazySet() | eventually writes the value to memory, may be reordered with subsequent relevant memory operations. One use case is nullifying references, for the sake of garbage collection, which is never going to be accessed again. In this case, better performance is achieved by delaying the null volatile write. |

| compareAndSet() | same as described in above section, returns true when it succeeds, else false |

| weakCompareAndSet() | same as compareAndSet, but weaker in the sense, that it does not create happens-before orderings. This means that it may not necessarily see updates made to other variables |

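| incrementAndGet() | atomically adds 1 and returns the updated value |

| decrementAndGet() | atomically subtracts 1 and returns the updated value |

| getAndDecrement() | atomically subtracts 1 and returns the previous value |

| getAndIncrement() | atomically adds 1 and returns the previous value |

| getAndAdd(int delta) | atomically adds delta and returns the previous value |

| addAndGet(int delta) | atomically adds delta and returns the updated value |

- The update operations above are implemented using compareAndSet or an equivalent atomic instruction.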
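The methods in the table differ mainly in whether they return the value before or after the update. A short sketch that makes the return values explicit (the class name AtomicMethodsDemo and the helper returnValues are hypothetical):

```java
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

class AtomicMethodsDemo {

    // Applies a fixed sequence of updates and collects what each call returned.
    static int[] returnValues() {
        AtomicInteger n = new AtomicInteger(10);
        int a = n.getAndIncrement();  // returns 10, n is now 11
        int b = n.incrementAndGet();  // n is now 12, returns 12
        int c = n.getAndAdd(5);       // returns 12, n is now 17
        int d = n.addAndGet(3);       // n is now 20, returns 20
        int e = n.decrementAndGet();  // n is now 19, returns 19
        return new int[] {a, b, c, d, e, n.get()};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(returnValues())); // [10, 12, 12, 20, 19, 19]
    }
}
```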

public class SafeCounterWithoutLock {

private final AtomicInteger counter = new AtomicInteger(0);

public int getValue() {

return counter.get();

}

public void increment() {

while (true) {

int existingValue = getValue();

int newValue = existingValue + 1;

if (counter.compareAndSet(existingValue, newValue)) {

return;

}

}

}

}

- We retry the compareAndSet operation again and again on failure, since we want to guarantee that every call to the increment method increases the value by exactly 1.

- When data (typically a variable) can be accessed by several threads, you must synchronize the access to the data to ensure visibility and correctness.

- This version is thread safe and, under low to moderate contention, usually faster than the synchronized one.

- This seems complicated, but it is the cost of non-blocking algorithms: when we detect a collision, we retry until the operation succeeds. This is the common scheme for non-blocking algorithms.

import java.util.concurrent.atomic.AtomicReference;

public class Stack {

private final AtomicReference<Element> head = new AtomicReference<>(null);

public void push(String value) {

Element newElement = new Element(value);

while (true) {

Element oldHead = head.get();

newElement.next = oldHead;

//Trying to set the new element as the head

if (head.compareAndSet(oldHead, newElement)) {

return;

}

}

}

public String pop() {

while (true) {

Element oldHead = head.get();

if (oldHead == null) {

return null;

} //The stack is empty

Element newHead = oldHead.next;

//Trying to set the new element as the head

if (head.compareAndSet(oldHead, newHead)) {

return oldHead.value;

}

}

}

private static final class Element {

private final String value;

private Element next;

private Element(String value) {

this.value = value;

}

}

}

- To conclude, atomic variables classes are a really good way to implement non-blocking algorithms and are also a very good alternative to volatile variables, because they can provide atomicity and visibility.

- The main purpose behind building atomic classes is to implement nonblocking data structures and their related infrastructure classes. Atomic classes do not serve as replacements for java.lang.Integer and related classes.

- Most java.util.concurrent package classes use atomic variables instead of synchronization, either directly or indirectly. Atomic variables also are used to achieve higher throughput, which means higher application server performance.

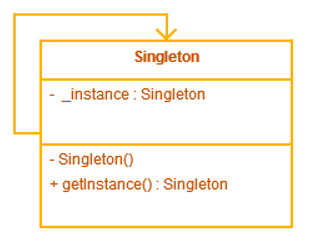

Singleton and DCL

- One of the key challenges is keeping a Singleton class a singleton, i.e. preventing multiple instances from being created for whatever reason.

- Double-checked locking is a way to ensure that only one instance of a Singleton class is created during the application life cycle. As the name suggests, the code checks for an existing instance twice, once without locking and once with locking, to make sure that no more than one instance gets created. It was broken before Java fixed its memory model issues in JDK 1.5.

class Singleton {

private volatile static Singleton _instance;

private Singleton() {} // private constructor

/*1st version: creates multiple instance if two thread access*/

public static Singleton getInstance() {

if (_instance == null) {

_instance = new Singleton();

}

return _instance;

}

/*

* 2nd version : only creates one instance of Singleton on concurrent environment

* but unnecessarily expensive due to cost of synchronization at every call.

*/

public static synchronized Singleton getInstanceTS() {

if (_instance == null) {

_instance = new Singleton();

}

return _instance;

}

/*

* 3rd version : An implementation of double checked locking of Singleton.

* Intention is to minimize cost of synchronization and improve performance,

* by only locking critical section of code, the code which creates instance of Singleton class.

* By the way this is still broken, if we don't make _instance volatile, as another thread can

* see a half initialized instance of Singleton.

*/

public static Singleton getInstanceDC() {

if (_instance == null) { // Single Checked

synchronized(Singleton.class) {

if (_instance == null) { // Double checked

_instance = new Singleton();

}

}

}

return _instance;

}

}

Why you need Double checked Locking of Singleton Class?

- Though the synchronized version is thread-safe and solves the problem of multiple instances, it is not very efficient.

- The cost of synchronization is paid every time the method is called, but synchronization is only needed on the first call, when the Singleton instance is created.

DCL was broken before Java 5

- Prior to Java 5, double-checked locking was broken because of the memory model; this has since been fixed.

- With the happens-before guarantee, the write to the volatile _instance happens before any subsequent read of _instance, so you can safely assume that this works.

- This is still not the best way to create a thread-safe Singleton: you can use an Enum as a Singleton, which provides built-in thread safety during instance creation. Another way is the static holder pattern.

- The DCL idiom was designed to support lazy initialization, which occurs when a class defers initialization of an owned object until it is actually needed.
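The static holder pattern mentioned above gets lazy initialization without any explicit locking, because the JVM initializes a class at most once and only when it is first used. A minimal sketch (the class names HolderSingleton and Holder are hypothetical):

```java
class HolderSingleton {

    private HolderSingleton() { } // no outside instantiation

    // Holder is not initialized until getInstance() first touches it;
    // class initialization is performed once and is thread-safe.
    private static class Holder {
        static final HolderSingleton INSTANCE = new HolderSingleton();
    }

    static HolderSingleton getInstance() {
        return Holder.INSTANCE;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance()); // prints "true"
    }
}
```

Compared with double-checked locking, this needs neither volatile nor synchronized in application code.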

Why would you want to defer initialization?

- Perhaps creating a Resource is an expensive operation, and users of SomeClass might not actually call getResource() in any given run. In that case, you can avoid creating the Resource entirely.

- Delaying some initialization operations until a user actually needs their results can help programs start up faster.

- Unfortunately, synchronized methods run slower than ordinary unsynchronized methods (as much as 100 times slower on early JVMs). One of the motivations for lazy initialization is efficiency, but it appears that in order to achieve faster program startup, you have to accept slower execution time once the program starts. That doesn’t sound like a great trade-off.

Java Enum and Singleton Pattern

- Below four things ensure that no instances of an enum type exist beyond those defined by the enum constants.

- An enum type has no instances other than those defined by its enum constants.

- It is a compile-time error to attempt to explicitly instantiate an enum type.

- The final clone method in Enum ensures that enum constants can never be cloned, and the special treatment by the serialization mechanism ensures that duplicate instances are never created as a result of deserialization.

- Reflective instantiation of enum types is prohibited.

- Enum Singletons are easy to write

- By default, creation of an Enum instance is thread safe, but the thread safety of any other method on the Enum is the programmer’s responsibility.

/*Singleton pattern example using Java Enum*/

public enum EasySingleton {

INSTANCE("Sunday Funday", true);

private String daysGreeting;

private boolean isWeekend;

EasySingleton(String daysGreeting, boolean isWeekend) {

this.daysGreeting = daysGreeting;

this.isWeekend = isWeekend;

}

}

/*Singleton pattern example with static factory method*/

public class Singleton {

// initialized during class loading

private static final Singleton INSTANCE = new Singleton();

private Singleton() {} // private constructor, no other instance

public static Singleton getSingleton() {

return INSTANCE;

}

}

/* Singleton pattern example with Double checked Locking*/

public class DoubleCheckedLockingSingleton{

private static volatile DoubleCheckedLockingSingleton INSTANCE;

private DoubleCheckedLockingSingleton(){}

public static DoubleCheckedLockingSingleton getInstance(){

if(INSTANCE == null){

synchronized(DoubleCheckedLockingSingleton.class){ //double checking Singleton instance

if(INSTANCE == null){ INSTANCE = new DoubleCheckedLockingSingleton(); }

}

}

return INSTANCE;

}

}

- Enum Singletons handled Serialization by themselves

- Another problem with conventional Singletons is that once they implement the Serializable interface they no longer remain singletons, because readObject() always returns a new instance, just like a constructor. You can avoid that by using the readResolve() method and discarding the newly created instance by replacing it with the Singleton, as shown in the example below.

- This can become even more complex if your Singleton class maintains state, as you need to make the fields transient; with an Enum Singleton, serialization is guaranteed by the JVM.

//readResolve to prevent another instance of Singleton

private Object readResolve() {

return INSTANCE;

}
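The effect of readResolve() can be checked with an in-memory serialization round trip. The following is a sketch under stated assumptions: the names SerializableSingleton, EnumSingleton and roundTrip are hypothetical, and the enum is included only to show that it needs no extra code.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

class SerializableSingleton implements Serializable {
    private static final long serialVersionUID = 1L;
    static final SerializableSingleton INSTANCE = new SerializableSingleton();

    private SerializableSingleton() { }

    // Replaces the freshly deserialized object with the existing instance.
    private Object readResolve() {
        return INSTANCE;
    }

    // Serializes an object to a byte array and reads it back.
    static Object roundTrip(Object original) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(original);
        }
        try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(roundTrip(INSTANCE) == INSTANCE);
        System.out.println(roundTrip(EnumSingleton.INSTANCE) == EnumSingleton.INSTANCE);
    }
}

// Enum constants are resolved by name during deserialization; no readResolve() is needed.
enum EnumSingleton { INSTANCE }
```

Without the readResolve() method, the first comparison would be false, because deserialization would yield a second object.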

- Creation of Enum instance is thread-safe

- Since creation of Enum instance is thread-safe by default you don’t need to worry about double checked locking.

- In summary, given the serialization and thread-safety guarantees and the couple of lines of code it takes, the enum Singleton pattern is the best way to create a Singleton in the Java 5 world.

DCL - Meet the Java Memory Model

- More accurately, DCL without volatile is not guaranteed to work. To understand why, we need to look at the relationship between the JVM and the computer environment on which it runs.

- Unlike most other languages, Java defines its relationship to the underlying hardware through a formal memory model that is expected to hold on all Java platforms, enabling Java’s promise of “Write Once, Run Anywhere.”

- When running in a synchronous (single-threaded) environment, a program’s interaction with memory is quite simple, or at least it appears so. Programs store items into memory locations and expect that they will still be there the next time those memory locations are examined. Though we think of programs as executing sequentially – in the order specified by the program code – that doesn’t always happen. Compilers, processors, and caches are free to take all sorts of liberties with our programs and data, as long as they don’t affect the result of the computation.

- For example, compilers can generate instructions in a different order from the obvious interpretation the program suggests and store variables in registers instead of memory; processors may execute instructions in parallel or out of order; and caches may vary the order in which writes commit to main memory. The JMM says that all of these various reorderings and optimizations are acceptable, so long as the environment maintains as-if-serial semantics – that is, so long as you achieve the same result as you would have if the instructions were executed in a strictly sequential environment.

- With multithreaded programs, the situation is quite different – one thread can read memory locations that another thread has written. If thread A modifies some variables in a certain order, in the absence of synchronization, thread B may not see them in the same order – or may not see them at all, for that matter.

- That could result because

- the compiler reordered the instructions or temporarily stored a variable in a register and wrote it out to memory later;

- or because the processor executed the instructions in parallel or in a different order than the compiler specified;

- or because the instructions were in different regions of memory, and the cache updated the corresponding main memory locations in a different order than the one in which they were written.

Whatever the circumstances, multithreaded programs are inherently less predictable, unless you explicitly ensure that threads have a consistent view of memory by using synchronization.

What does synchronized really mean?

- Java treats each thread as if it runs on its own processor with its own local memory, each talking to and synchronizing with a shared main memory.

- Even on a single-processor system, that model makes sense because of the effects of memory caches and the use of processor registers to store variables. When a thread modifies a location in its local memory, that modification should eventually show up in the main memory as well, and the JMM defines the rules for when the JVM must transfer data between local and main memory.

- The Java architects realized that an overly restrictive memory model would seriously undermine program performance. They attempted to craft a memory model that would allow programs to perform well on modern computer hardware while still providing guarantees that would allow threads to interact in predictable ways.

- Java’s primary tool for rendering interactions between threads predictable is the synchronized keyword. Many programmers think of synchronized strictly in terms of enforcing a mutual exclusion semaphore (mutex) to prevent execution of critical sections by more than one thread at a time. Unfortunately, that intuition does not fully describe what synchronized means.

- The semantics of synchronized do indeed include mutual exclusion of execution based on the status of a semaphore, but they also include rules about the synchronizing thread’s interaction with main memory. In particular, the acquisition or release of a lock triggers a memory barrier – a forced synchronization between the thread’s local memory and main memory. (Some processors – like the Alpha – have explicit machine instructions for performing memory barriers.)

- When a thread exits a synchronized block, it performs a write barrier – it must flush out any variables modified in that block to main memory before releasing the lock.

- Similarly, when entering a synchronized block, it performs a read barrier – it is as if the local memory has been invalidated, and it must fetch any variables that will be referenced in the block from main memory.

- The proper use of synchronization guarantees that one thread will see the effects of another in a predictable manner. Only when threads A and B synchronize on the same object will the JMM guarantee that thread B sees the changes made by thread A, and that changes made by thread A inside the synchronized block appear atomically to thread B (either the whole block executes or none of it does).

- Furthermore, the JMM ensures that synchronized blocks that synchronize on the same object will appear to execute in the same order as they do in the program.

Synchronized block = enforcing a mutual exclusion semaphore (mutex) + memory barrier
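The two halves of that summary can be seen in a small counter. This is a minimal sketch, not code from the original notes: the class name `SynchronizedCounterSketch` and the helper `runTwoThreads` are hypothetical. Both threads synchronize on the same object, so each increment is mutually exclusive, and the final read (also synchronized on that object) sees every update.

```java
// Hypothetical example class; names are illustrative only.
class SynchronizedCounterSketch {
    private int count = 0; // guarded by the lock on "this"

    // Entering acquires the lock (read barrier), leaving releases it (write barrier).
    synchronized void increment() {
        count++;
    }

    // Reads go through the same lock so they see the latest written value.
    synchronized int get() {
        return count;
    }

    // Starts two threads that each increment a shared counter perThread times.
    static int runTwoThreads(int perThread) {
        SynchronizedCounterSketch counter = new SynchronizedCounterSketch();
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                counter.increment();
            }
        };
        Thread a = new Thread(work, "A");
        Thread b = new Thread(work, "B");
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(10_000)); // prints 20000
    }
}
```

Removing synchronized from increment() would allow lost updates, and the total could then be lower than expected.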

So what’s broken about DCL?

- DCL relies on an unsynchronized use of the resource field. That appears to be harmless, but it is not.

- To see why, imagine that thread A is inside the synchronized block, executing the statement resource = new Resource(); while thread B is just entering getResource(). Consider the effect on memory of this initialization. Memory for the new Resource object will be allocated; the constructor for Resource will be called, initializing the member fields of the new object; and the field resource of SomeClass will be assigned a reference to the newly created object.

However, since thread B is not executing inside a synchronized block, it may see these memory operations in a different order than the one thread A executes. It could be the case that B sees these events in the following order (and the compiler is also free to reorder the instructions like this): allocate memory, assign reference to resource, call constructor. Suppose thread B comes along after the memory has been allocated and the resource field is set, but before the constructor is called. It sees that resource is not null, skips the synchronized block, and returns a reference to a partially constructed Resource! Needless to say, the result is neither expected nor desired.

When presented with this example, many people are skeptical at first. Many highly intelligent programmers have tried to fix DCL so that it does work, but none of these supposedly fixed versions work either. It should be noted that DCL might, in fact, work on some versions of some JVMs – as few JVMs actually implement the JMM properly. However, you don’t want the correctness of your programs to rely on implementation details – especially errors – specific to the particular version of the particular JVM you use.

Other concurrency hazards are embedded in DCL, and in any unsynchronized reference to memory written by another thread, even harmless-looking reads. Suppose thread A has completed initializing the Resource and exits the synchronized block as thread B enters getResource(). Now the Resource is fully initialized, and thread A flushes its local memory out to main memory. The Resource may reference other objects through its member fields, and those are flushed out as well. Thread B may see a valid reference to the newly created Resource, but because it did not perform a read barrier, it could still see stale values of the Resource’s member fields.

Volatile doesn’t mean what you think, either

A commonly suggested nonfix is to declare the resource field of SomeClass as volatile. Under the original JMM (before Java 5) this did not help: the JMM prevented writes to volatile variables from being reordered with respect to one another and ensured that they were flushed to main memory immediately, but it still permitted reads and writes of volatile variables to be reordered with respect to nonvolatile reads and writes. That meant that, unless all Resource fields were volatile as well, thread B could still perceive the constructor’s effect as happening after resource was set to reference the newly created Resource. The revised memory model introduced in Java 5 (JSR-133) strengthened volatile so that this reordering is no longer permitted, which means a volatile resource field does make DCL correct on Java 5 and later.
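For readers on Java 5 or later, where the revised memory model (JSR-133) gives a volatile write a happens-before relationship with later reads of the same field, the volatile form of the idiom can be sketched as follows. This is an illustration under that assumption, not the original article's code: the class name `VolatileDclSketch` and the `Resource` constructor argument are hypothetical.

```java
// Sketch only: assumes Java 5+ volatile semantics (JSR-133).
class VolatileDclSketch {
    // Stand-in for the Resource class discussed in the text.
    static class Resource {
        final int value;

        Resource(int value) {
            this.value = value;
        }
    }

    // volatile: the write below cannot become visible before the constructor's writes.
    private volatile Resource resource;

    Resource getResource() {
        Resource local = resource;      // single volatile read on the fast path
        if (local == null) {
            synchronized (this) {
                local = resource;       // second check, now under the lock
                if (local == null) {
                    local = new Resource(42);
                    resource = local;   // publish the fully constructed object
                }
            }
        }
        return local;
    }
}
```

Even where this works, the simpler alternatives are usually easier to reason about.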

Alternatives to DCL

- The most effective way to fix the DCL idiom is to avoid it. The simplest way to avoid it, of course, is to use synchronization. Whenever a variable written by one thread is being read by another, you should use synchronization to guarantee that modifications are visible to other threads in a predictable manner.

- Another option for avoiding the problems with DCL is to drop lazy initialization and instead use eager initialization. Rather than delay initialization of resource until it is first used, initialize it at construction. The class loader, which synchronizes on the class’s Class object, executes static initializer blocks at class initialization time. That means that the effect of static initializers is automatically visible to all threads as soon as the class loads.
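Both alternatives can be sketched side by side. The class names below (`DclAlternativesSketch`, `LazySynchronized`, `Eager`) are hypothetical, and `Resource` is an empty stand-in for whatever object is being initialized.

```java
// Hypothetical illustration of the two alternatives to DCL.
class DclAlternativesSketch {
    // Stand-in for the expensive object discussed in the text.
    static class Resource {
    }

    // Alternative 1: keep lazy initialization, but synchronize every access.
    static class LazySynchronized {
        private Resource resource;

        synchronized Resource getResource() {
            if (resource == null) {
                resource = new Resource();
            }
            return resource;
        }
    }

    // Alternative 2: eager initialization in a static initializer.
    // Class initialization makes the assignment visible to all threads.
    static class Eager {
        private static final Resource RESOURCE = new Resource();

        static Resource getResource() {
            return RESOURCE;
        }
    }
}
```

The first variant pays for a lock acquisition on every call. The second has no locking cost on access, but creates the object when the class is initialized rather than on first use of the resource.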

ThreadGroup

- Java provides a convenient way to group multiple threads in a single object.

- This way, we can interrupt a whole group of threads with a single method call. (Group-wide suspend() and resume() methods also exist, but they are deprecated and should not be used.)

- For example, imagine a program in which one set of threads is used for printing a document, another set is used to display the document on the screen, and another set saves the document to a disk file. If printing is aborted, you will want an easy way to stop all threads related to printing. Thread groups offer this convenience.

| Method of ThreadGroup | Description |
|---|---|
| ThreadGroup(String name) | Creates a thread group with the given name. |
| ThreadGroup(ThreadGroup parent, String name) | Creates a thread group with the given parent group and name. |
| int activeCount() | Returns an estimate of the number of active threads in this group and its subgroups. |
| int activeGroupCount() | Returns an estimate of the number of active groups in this thread group and its subgroups. |
| void destroy() | Destroys this thread group and all its subgroups (deprecated in recent Java releases). |
| String getName() | Returns the name of this group. |
| ThreadGroup getParent() | Returns the parent of this group. |
| void interrupt() | Interrupts all threads in this group. |
| void list() | Prints information about this group to standard output. |

- Thread groups are implemented by the java.lang.ThreadGroup class.

ThreadGroup tg1 = new ThreadGroup("Group A");

Thread t1 = new Thread(tg1, new MyRunnable(), "one");

Thread t2 = new Thread(tg1, new MyRunnable(), "two"); //MyRunnable implements Runnable interface

Now we can interrupt all threads in the group with a single line of code.

tg1.interrupt(); // Thread.currentThread().getThreadGroup() would instead return the calling thread's own group

public class ThreadGroupDemo implements Runnable {

public void run() {

System.out.println(Thread.currentThread().getName());

}

public static void main(String[] args) {

ThreadGroupDemo runnable = new ThreadGroupDemo();

ThreadGroup tg1 = new ThreadGroup("Parent ThreadGroup");

Thread t1 = new Thread(tg1, runnable, "one");

t1.start();

Thread t2 = new Thread(tg1, runnable, "two");

t2.start();

Thread t3 = new Thread(tg1, runnable, "three");

t3.start();

System.out.println("Thread Group Name: " + tg1.getName());

tg1.list();

}

}

// Output (typical; the ordering, and which threads list() still shows, depend on scheduling and the Java version):

one

two

three

Thread Group Name: Parent ThreadGroup

java.lang.ThreadGroup[name=Parent ThreadGroup,maxpri=10]

Thread[one,5,Parent ThreadGroup]

Thread[two,5,Parent ThreadGroup]

Thread[three,5,Parent ThreadGroup]
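The printing scenario described earlier, where every thread in a group is stopped at once, can be sketched with ThreadGroup.interrupt(). This is a minimal sketch: the class name `GroupInterruptSketch`, the group name, and the helper `interruptAll` are hypothetical. The workers sleep to stand in for long-running work.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example; names are illustrative only.
class GroupInterruptSketch {
    // Starts threadCount workers in one group, interrupts the group,
    // and returns how many workers observed the interrupt.
    static int interruptAll(int threadCount) {
        ThreadGroup group = new ThreadGroup("printing");
        AtomicInteger interrupted = new AtomicInteger();
        CountDownLatch started = new CountDownLatch(threadCount);
        Thread[] workers = new Thread[threadCount];

        for (int i = 0; i < threadCount; i++) {
            workers[i] = new Thread(group, () -> {
                started.countDown();
                try {
                    Thread.sleep(60_000); // stands in for long-running work
                } catch (InterruptedException e) {
                    interrupted.incrementAndGet(); // cooperative cancellation point
                }
            }, "worker-" + i);
            workers[i].start();
        }

        try {
            started.await();   // make sure every worker is running
            group.interrupt(); // one call interrupts all threads in the group
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return interrupted.get();
    }

    public static void main(String[] args) {
        System.out.println(interruptAll(3)); // prints 3
    }
}
```

Interruption is cooperative: a worker only stops because it handles InterruptedException (or checks its interrupt status) and returns.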

What is ThreadGroup? Why is it advised not to use it?

- ThreadGroup is a class that was intended to provide information about a group of threads. Its API is weak and offers little functionality that Thread does not already provide. Its two main features were listing the active threads in a group and setting an uncaught exception handler for the group’s threads. Since Java 1.5, however, Thread has its own setUncaughtExceptionHandler(UncaughtExceptionHandler eh) method for attaching an uncaught exception handler to a thread. ThreadGroup is therefore largely obsolete, and its use is no longer advised.

t1.setUncaughtExceptionHandler(new Thread.UncaughtExceptionHandler() {

@Override

public void uncaughtException(Thread t, Throwable e) {

System.out.println("exception occurred: " + e.getMessage());

}

});
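A self-contained version of the handler snippet is sketched below. The class name `UncaughtHandlerSketch`, the thread name, and the exception message are hypothetical. The handler runs on the dying thread before it terminates, so the captured message is available once join() returns.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical example; names are illustrative only.
class UncaughtHandlerSketch {
    // Runs a thread that fails and returns what its handler recorded.
    static String runAndCapture() {
        AtomicReference<String> captured = new AtomicReference<>();

        Thread worker = new Thread(() -> {
            throw new IllegalStateException("boom"); // never caught inside run()
        }, "worker");

        // The handler is a functional interface, so a lambda works on Java 8+.
        worker.setUncaughtExceptionHandler((thread, error) ->
                captured.set(thread.getName() + ": " + error.getMessage()));

        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return captured.get();
    }

    public static void main(String[] args) {
        System.out.println(runAndCapture()); // prints "worker: boom"
    }
}
```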

Java Shutdown Hook

- A shutdown hook can be used to clean up resources or save state when the JVM shuts down, whether it exits normally or is interrupted (for example with ctrl+c). Hooks are not run if the JVM is killed forcibly or halted.

- Cleaning up resources means, for example, closing a log file or sending an alert.

- So, if you want to execute some code before the JVM shuts down, use a shutdown hook.

When does the JVM shut down?

- user presses ctrl+c on the command prompt

- System.exit(int) method is invoked

- the user logs off

- the system shuts down, etc.

The addShutdownHook() method of Runtime class is used to register the thread with the Virtual Machine.

public void addShutdownHook(Thread hook){}

The Runtime object can be obtained by calling the static factory method getRuntime(). A method that returns an instance of a class is known as a factory method.

Runtime r = Runtime.getRuntime();

class MyThread extends Thread{

public void run(){ System.out.println("shut down hook task completed.."); }

}

public class TestShutdown1{

public static void main(String[] args)throws Exception {

Runtime r = Runtime.getRuntime();

r.addShutdownHook(new MyThread());

System.out.println("Now main sleeping... press ctrl+c to exit");

try{Thread.sleep(3000);}catch (Exception e) {}

}

}

// Output:

Now main sleeping... press ctrl+c to exit

shut down hook task completed..

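Note: A shutdown sequence that is already in progress can be stopped only by invoking the halt(int) method of the Runtime class, which terminates the JVM forcibly without running any remaining hooks.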
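Registering a hook does not require a Thread subclass: any Runnable can be wrapped in a thread. The sketch below uses a hypothetical class `ShutdownHookSketch` with a hypothetical helper `register`. It also shows that a hook can be withdrawn with Runtime.removeShutdownHook(Thread), which returns true if the hook was previously registered.

```java
// Hypothetical example; names are illustrative only.
class ShutdownHookSketch {
    // Wraps the cleanup action in an unstarted thread and registers it as a hook.
    static Thread register(Runnable cleanup) {
        Thread hook = new Thread(cleanup, "cleanup-hook");
        Runtime.getRuntime().addShutdownHook(hook);
        return hook;
    }

    public static void main(String[] args) {
        Thread hook = register(() -> System.out.println("closing resources"));
        System.out.println("working");
        // To cancel the hook before exit:
        // Runtime.getRuntime().removeShutdownHook(hook);
        // On normal exit the JVM starts the hook, so "closing resources"
        // is printed after "working".
    }
}
```

The hook thread must be passed unstarted, since the JVM starts it during shutdown. Hooks should be short, because the shutdown sequence waits for them to finish.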

Executor API

- The Executor framework is a framework for standardizing invocation, scheduling, execution, and control of asynchronous tasks according to a set of execution policies.